Accelerate your AI agent adoption

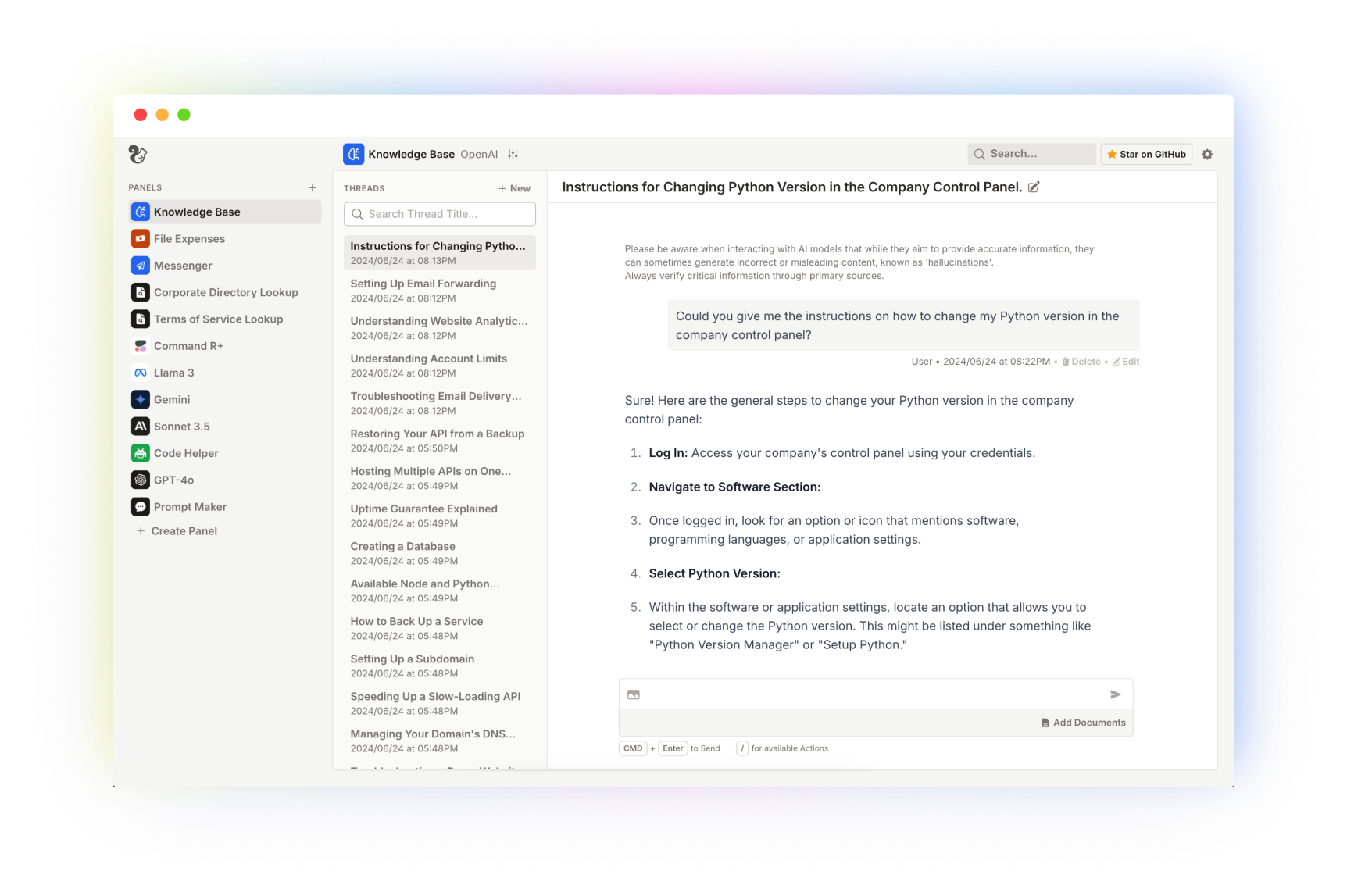

Your private, open-source assistant platform

Any large language model

Across any inference provider, any way you want. From commercial providers like OpenAI, Anthropic, Gemini, or Cohere to open-source models, either hosted or running locally via Ollama.

Access controls

Assign users to agents without revealing your API tokens or credentials. Enable user sign-up and login with OpenID Connect (OIDC) single sign-on.

Bring your own data

Store it locally on your instance. Use it safely by pairing it with any language model or solution, whether online or offline.

Create agent plugins

Use Python to build your own agent plugins. Customize your AI agent's capabilities, and create your own retrieval-augmented generation (RAG) pipelines.
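To give a feel for what a RAG pipeline does, here is a minimal sketch in plain Python. It is not the platform's plugin API: the function names (`retrieve`, `build_prompt`) and the sample documents are hypothetical, and simple word-overlap scoring stands in for the embedding search a real pipeline would likely use.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, document: str) -> int:
    """Count the tokens shared by the query and the document."""
    shared = Counter(tokenize(query)) & Counter(tokenize(document))
    return sum(shared.values())


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents with a non-zero overlap, best first."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:top_k] if score(query, doc) > 0]


def build_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Place the retrieved documents ahead of the question (hypothetical prompt format)."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical sample data, for illustration only.
DOCUMENTS = [
    "Invoices are due within 30 days.",
    "The office is closed on public holidays.",
    "Late invoices incur a 2% fee.",
]

if __name__ == "__main__":
    print(build_prompt("When are invoices due?", DOCUMENTS))
```

The resulting prompt can be sent to whichever language model you have paired with your data.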

Build more with helpful starters.

Out-of-the-box primitives to help power up your team with AI.

All your models.

Reference implementations for all major AI model providers.

Local inference with Ollama and GPT-compatible APIs is covered as well.
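As a rough illustration of local inference, the sketch below builds a chat request for a locally running Ollama server. It assumes that "GPT-compatible" means the OpenAI-style chat completions format, which Ollama serves under `/v1` on its default port. The model name `llama3` and the helper names are placeholders, and this is not one of the platform's reference implementations.

```python
import json
import urllib.request

# Ollama's default local address; adjust it if your server runs elsewhere.
OLLAMA_BASE_URL = "http://localhost:11434/v1"


def build_chat_request(
    prompt: str,
    model: str = "llama3",  # placeholder: use a model you have pulled locally
    base_url: str = OLLAMA_BASE_URL,
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, **kwargs) -> str:
    """Send the request and return the reply text; needs a running server."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Because the request format is shared, pointing `base_url` at another compatible provider should need no other code changes, although hosted providers will typically also require an API key header.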