Deep Dive: What We Learned From A Year of Building With LLMs


Eugene Yan @eugeneyan / Amazon

Hamel Husain @HamelHusain / Parlance Labs

Jason Liu @jxnlco / Independent & Instructor

Dr. Bryan Bischof @bebischof / HEX

Charles Frye @charles_irl / Modal

Shreya Shankar @sh_reya / UCB EECS & EPIC Lab, UC Berkeley


This talk, given by several co-authors of the "What We Learned from a Year of Building with LLMs" articles, covered the key lessons from their year of building with large language models (LLMs).

The original articles are available on O'Reilly Radar:

- https://www.oreilly.com/radar/what-we-learned-from-a-year-of-building-with-llms-part-i/

- https://www.oreilly.com/radar/what-we-learned-from-a-year-of-building-with-llms-part-ii/

The presenters divided their insights into strategic, operational, and tactical considerations.

Strategic Considerations

The speakers emphasized that the model itself is not the moat for most companies.

Instead, they advised:

- Leveraging existing product expertise

- Finding and exploiting a niche

- Building what model providers are not

- Treating models like any other SaaS product

A key focus was on continuous improvement, drawing parallels to concepts from MLOps, DevOps, and even the Toyota Production System.

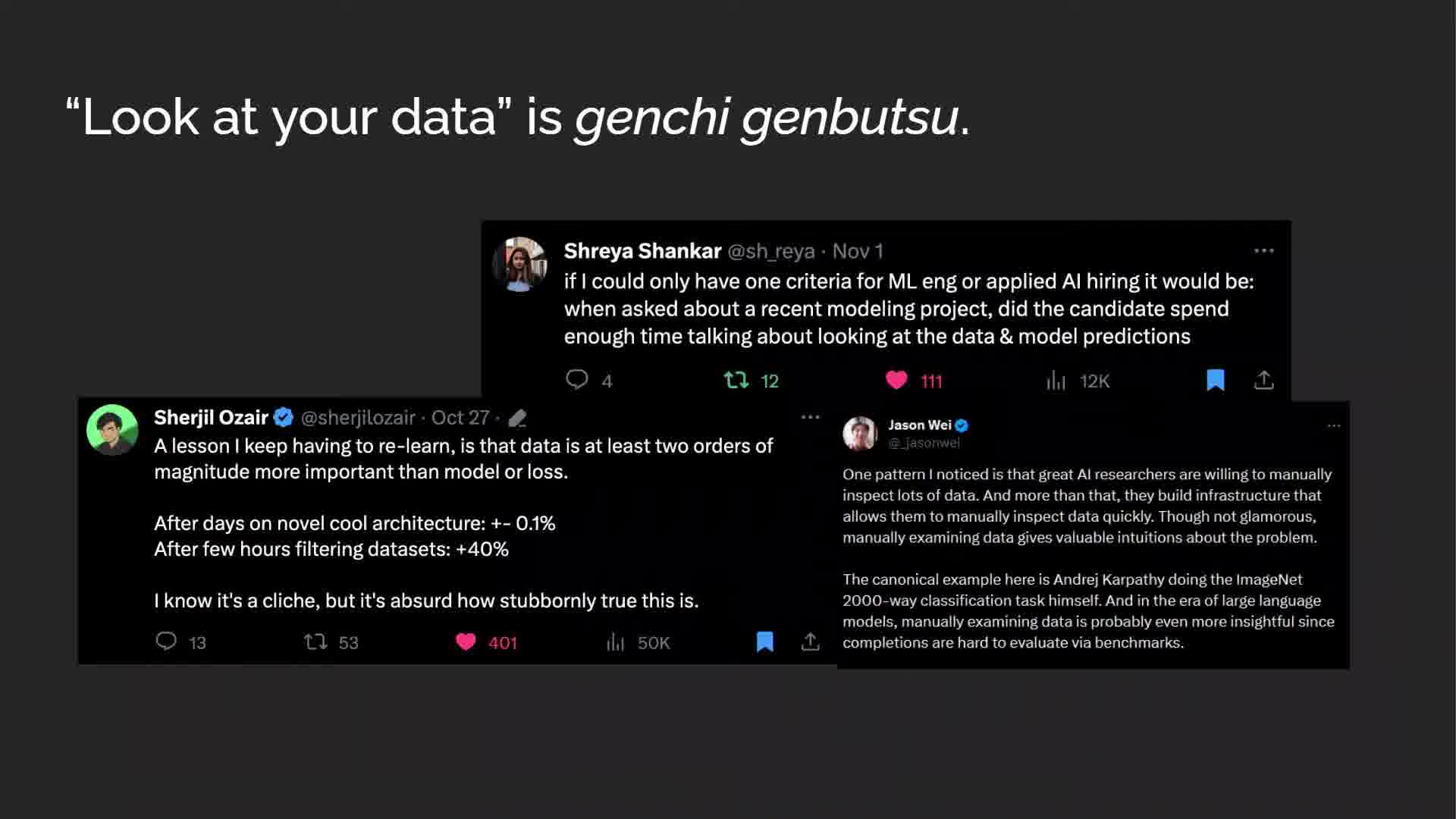

The speakers stressed the importance of looking at real data about how LLM applications deliver value to users.

The speakers also made a point about projecting future costs:

There's been an order of magnitude decrease in cost every 12 to 18 months at three distinct levels of capability.

This suggests planning for applications that aren't economical today but may become so in the near future.
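To make that projection concrete: if the cost of a fixed capability level falls 10x every T months, the cost after t months is c(t) = c_0 * 10^(-t/T). The sketch below assumes the trend continues as a clean exponential, which the talk does not promise; the 12 to 18 month range comes from the talk, while the function names and example prices are hypothetical.

```python
import math


def projected_cost(current_cost: float, months_ahead: float, months_per_10x: float = 12.0) -> float:
    """Project cost assuming a 10x drop every `months_per_10x` months.

    Assumption: cost(t) = current_cost * 10 ** (-t / months_per_10x),
    i.e. the historical trend holds as a smooth exponential decay.
    """
    if months_per_10x <= 0:
        raise ValueError("months_per_10x must be positive")
    return current_cost * 10 ** (-months_ahead / months_per_10x)


def months_until_affordable(current_cost: float, target_cost: float, months_per_10x: float = 12.0) -> float:
    """Months until cost falls to `target_cost`, under the same assumption."""
    if months_per_10x <= 0:
        raise ValueError("months_per_10x must be positive")
    if target_cost >= current_cost:
        return 0.0  # already affordable
    # Solve current_cost * 10 ** (-t / T) = target_cost for t.
    return months_per_10x * math.log10(current_cost / target_cost)
```

For example, a hypothetical feature that costs $0.50 per request today but only makes business sense at $0.05 would become viable in about 12 months at the faster pace and about 18 months at the slower one, which is close enough to start prototyping now.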

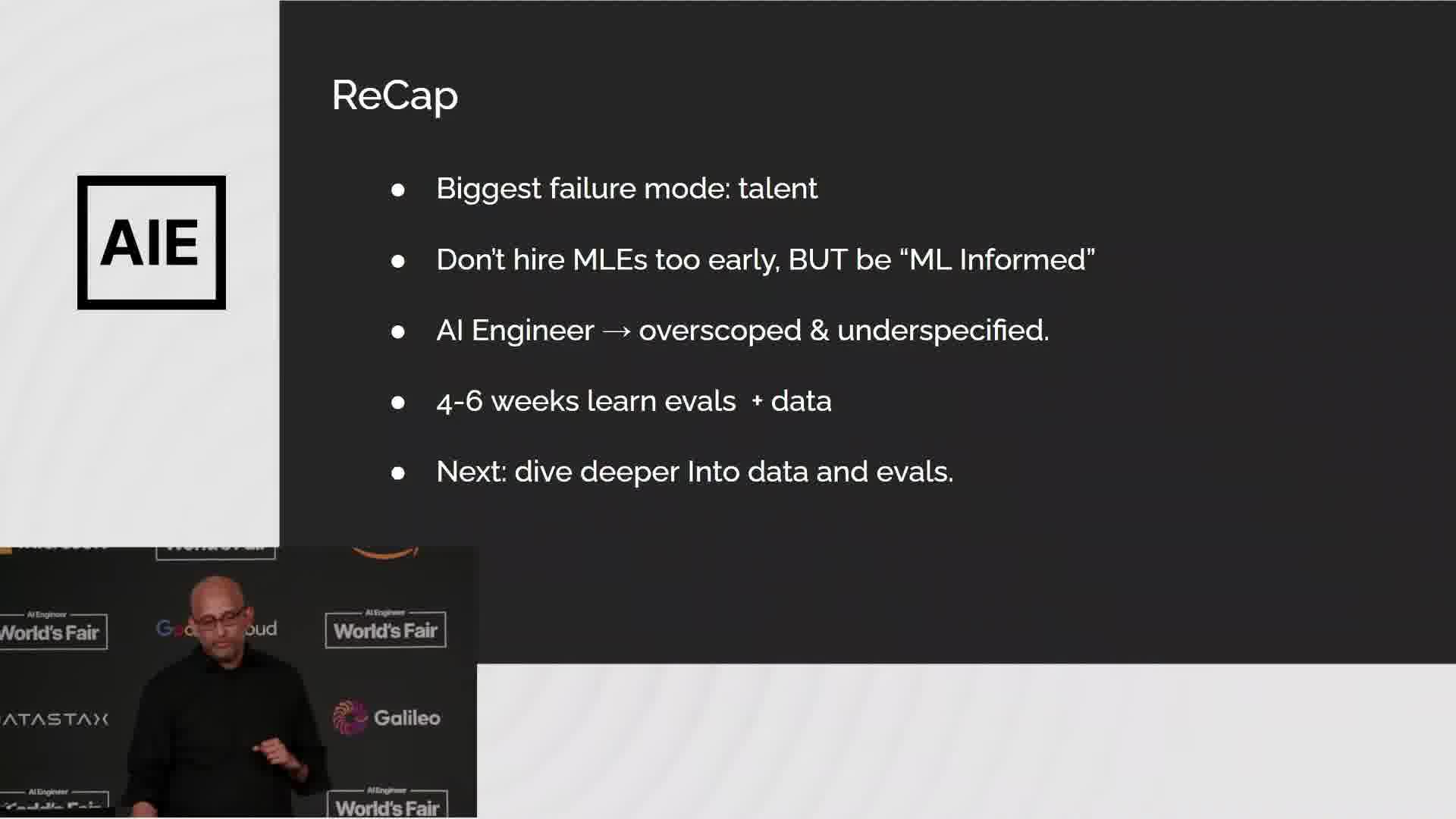

Operational Considerations

The speakers framed their advice as a tongue-in-cheek guide to how you too can "ruin your business," illustrating common pitfalls:

- Overreliance on tools without developing expertise or processes

- Hiring machine learning engineers prematurely

- Using vague job titles like "AI engineer" without specific skill requirements

They emphasized the importance of data literacy and evaluation skills, suggesting that these can be developed with 4-6 weeks of deliberate practice.

Tactical Considerations

Evaluations (Evals)

The speakers stressed the importance of evals:

How important evals are to the team is a differentiator between teams shipping out hot garbage and those building real products.

They recommended:

- Breaking down complex tasks into simpler components

- Using assertion-based tests where possible

- Considering evaluator models for more complex criteria
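The first two recommendations can be sketched as a set of small, ordered assertions. This is a minimal example assuming a hypothetical summarization feature that must return JSON with a "summary" string and a "tags" list; the output contract, the 50-word limit, and all function names are illustrative, not from the talk.

```python
import json
from typing import Callable, Optional

MAX_SUMMARY_WORDS = 50  # hypothetical limit, for illustration only


def check_valid_json(output: str) -> bool:
    try:
        json.loads(output)
        return True
    except json.JSONDecodeError:
        return False


def check_required_keys(output: str) -> bool:
    # Assumes check_valid_json already passed.
    data = json.loads(output)
    return (
        isinstance(data, dict)
        and isinstance(data.get("summary"), str)
        and isinstance(data.get("tags"), list)
    )


def check_summary_length(output: str) -> bool:
    # Assumes check_required_keys already passed.
    return len(json.loads(output)["summary"].split()) <= MAX_SUMMARY_WORDS


def check_no_refusal(output: str) -> bool:
    return "as an ai language model" not in output.lower()


# Each check covers one simple component of the task; order matters because
# later checks assume the output parsed and has the expected shape.
CHECKS: list[tuple[str, Callable[[str], bool]]] = [
    ("valid_json", check_valid_json),
    ("required_keys", check_required_keys),
    ("summary_length", check_summary_length),
    ("no_refusal", check_no_refusal),
]


def first_failure(output: str) -> Optional[str]:
    """Return the name of the first failing check, or None if all pass."""
    for name, check in CHECKS:
        if not check(output):
            return name
    return None
```

Checks like these are cheap enough to run on every output; criteria that resist a simple assertion (tone, helpfulness) are where an evaluator model comes in.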

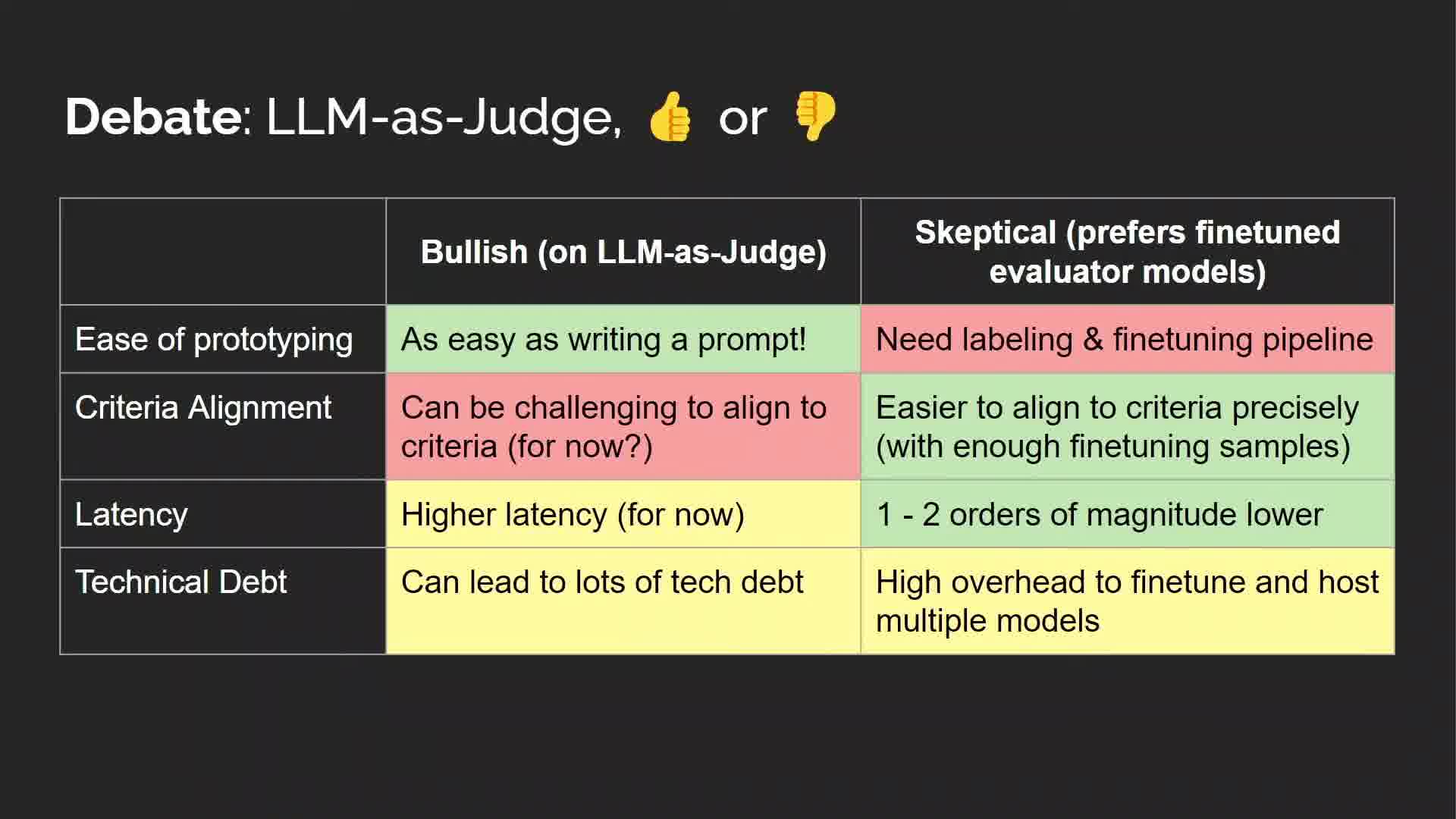

LLM as Judge

Pros:

- Easy to prototype

- Can be aligned with few-shot examples

Cons:

- Difficult to align precisely

- Slower than fine-tuned models

- Requires ongoing maintenance
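One way to work with these trade-offs is to build the judge prompt from human-labeled few-shot examples and then measure how well its verdicts agree with human labels on a held-out set. The sketch below assumes binary pass/fail labels; the prompt wording and the `LabeledExample` type are hypothetical, and the actual model call is left out because it depends on your provider. Cohen's kappa is used here as one common chance-corrected agreement measure, not as a method the speakers prescribed.

```python
from dataclasses import dataclass


@dataclass
class LabeledExample:
    """A hypothetical human-labeled example used for few-shot alignment."""
    output: str
    passed: bool
    critique: str


def build_judge_prompt(criterion: str, examples: list[LabeledExample], candidate: str) -> str:
    """Assemble a few-shot judge prompt; the wording is illustrative only."""
    lines = [f"You are grading outputs against this criterion: {criterion}", ""]
    for ex in examples:
        verdict = "PASS" if ex.passed else "FAIL"
        lines += [f"Output: {ex.output}", f"Critique: {ex.critique}", f"Verdict: {verdict}", ""]
    # The judge is asked to write a critique first, then a verdict.
    lines += [f"Output: {candidate}", "Critique:"]
    return "\n".join(lines)


def cohens_kappa(human: list[bool], judge: list[bool]) -> float:
    """Chance-corrected agreement between human labels and judge verdicts."""
    if len(human) != len(judge) or not human:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(human)
    observed = sum(h == j for h, j in zip(human, judge)) / n
    p_human = sum(human) / n
    p_judge = sum(judge) / n
    # Probability of agreeing by chance if each rater kept its own pass rate.
    expected = p_human * p_judge + (1 - p_human) * (1 - p_judge)
    if expected == 1.0:
        # Both raters used one identical label throughout; kappa is
        # undefined here, so treat it as perfect agreement.
        return 1.0
    return (observed - expected) / (1 - expected)
```

Re-running the agreement check whenever the judge prompt or underlying model changes is one concrete form the "ongoing maintenance" cost takes.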

Data Analysis

The speakers emphasized regular data inspection:

- Create dedicated channels for real-time agent outputs

- Look for easily characterizable data slices

- Track code, prompt, and model versions when inspecting traces
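Logging versions alongside each trace is what makes slicing useful: a spike in failures can be tied to a specific prompt, model, or code change. A minimal sketch, assuming a hypothetical trace schema where `passed` comes from human review or an automated check; none of these field names come from the talk.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Trace:
    """One logged interaction. The schema is hypothetical."""
    code_version: str
    prompt_version: str
    model: str
    user_segment: str  # an easily characterizable slice, e.g. "new_user"
    passed: bool       # outcome of human review or an automated check


def failure_rate_by(traces: list[Trace], key: str) -> dict[str, float]:
    """Group traces by one attribute and return the failure rate per group."""
    totals: dict[str, int] = defaultdict(int)
    failures: dict[str, int] = defaultdict(int)
    for t in traces:
        group = getattr(t, key)
        totals[group] += 1
        if not t.passed:
            failures[group] += 1
    return {g: failures[g] / totals[g] for g in totals}
```

Calling `failure_rate_by(traces, "prompt_version")` and then `failure_rate_by(traces, "user_segment")` gives two quick views of where problems cluster before anyone reads individual traces.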

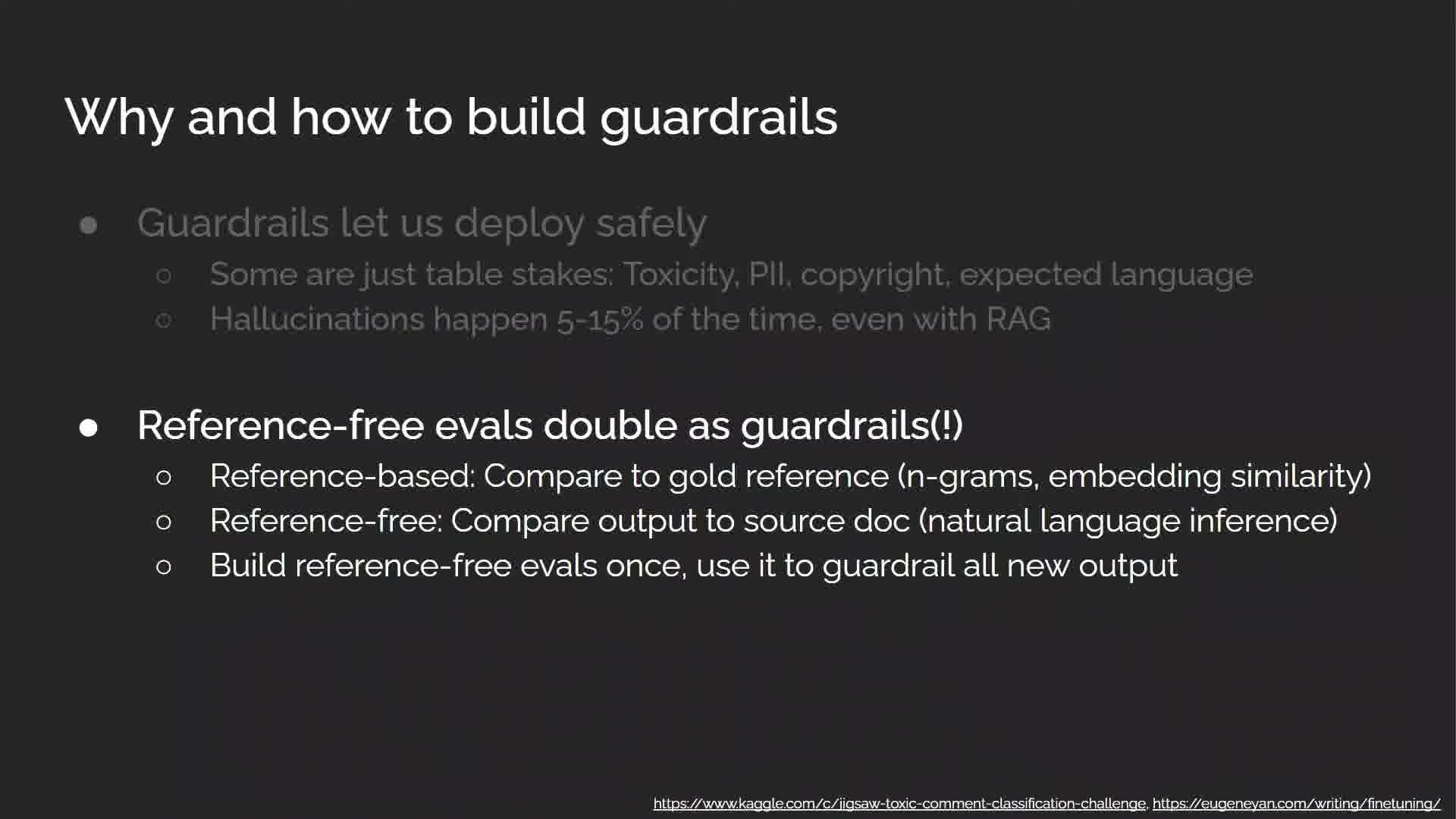

Guardrails

Automated guardrails were recommended for ongoing data monitoring, including checks for:

- Toxicity

- Personally identifiable information

- Copyright issues

- Expected language

The speakers suggested using reference-free evals as guardrails where possible.
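Reference-free checks inspect only the output itself, with no gold answer, which is what makes them usable on live traffic. The sketch below covers two of the listed checks under loud assumptions: the PII regexes are illustrative and far from complete, and a placeholder blocklist stands in for a real toxicity classifier. Copyright and expected-language checks are omitted because they typically need a dedicated classifier or language-ID model.

```python
import re
from dataclasses import dataclass, field

# Illustrative patterns only; real PII detection needs much broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
US_PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")
# Placeholder blocklist standing in for a toxicity classifier.
BLOCKLIST = {"idiot", "stupid"}


@dataclass
class GuardrailResult:
    flags: list[str] = field(default_factory=list)

    @property
    def ok(self) -> bool:
        return not self.flags


def check_output(text: str) -> GuardrailResult:
    """Run reference-free checks that need only the model output."""
    result = GuardrailResult()
    if EMAIL_RE.search(text) or US_PHONE_RE.search(text):
        result.flags.append("pii")
    words = set(re.findall(r"[a-z']+", text.lower()))
    if words & BLOCKLIST:
        result.flags.append("toxicity")
    return result
```

Returning a list of flags rather than a single boolean makes it easy to route each kind of violation differently, for example blocking PII outright while only logging borderline toxicity for review.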

Key Takeaway

The talk concluded by drawing parallels to traditional MLOps, emphasizing that many of the same principles apply to LLM applications.

They stressed that there's significant work required beyond simply wrapping a model in software.

This talk provided a comprehensive overview of LLM application development, balancing high-level strategy with practical tactics. The speakers' experience across multiple companies lent weight to their insights, making this a valuable resource for anyone working with LLMs.