Deep Dive: Unveiling the latest Gemma model advancements

Kathleen Kenealy / Google

Gemma is Google DeepMind's family of lightweight, state-of-the-art open models, built from the same research and technology used to create the Gemini models. The speaker emphasized several key advantages:

- Built with responsibility and safety as a top priority from day zero

- Breakthrough performance for models of their scale

- Highly extensible and optimized for various platforms (TPUs, GPUs, local devices)

- Supported across multiple frameworks and tools (TensorFlow, JAX, Keras, PyTorch, Ollama, Hugging Face Transformers)

- Open access and open license

Recent Additions to the Gemma Family

The speaker highlighted several recent additions to the Gemma model family:

- CodeGemma: Fine-tuned for improved performance on code generation and evaluation

- RecurrentGemma: Built on a novel recurrent architecture (Griffin, which combines linear recurrences with local attention) designed for faster and more efficient inference, especially with long contexts

- PaliGemma: A multimodal model combining the SigLIP vision encoder with a Gemma 1.0 text decoder, capable of a variety of image-text tasks

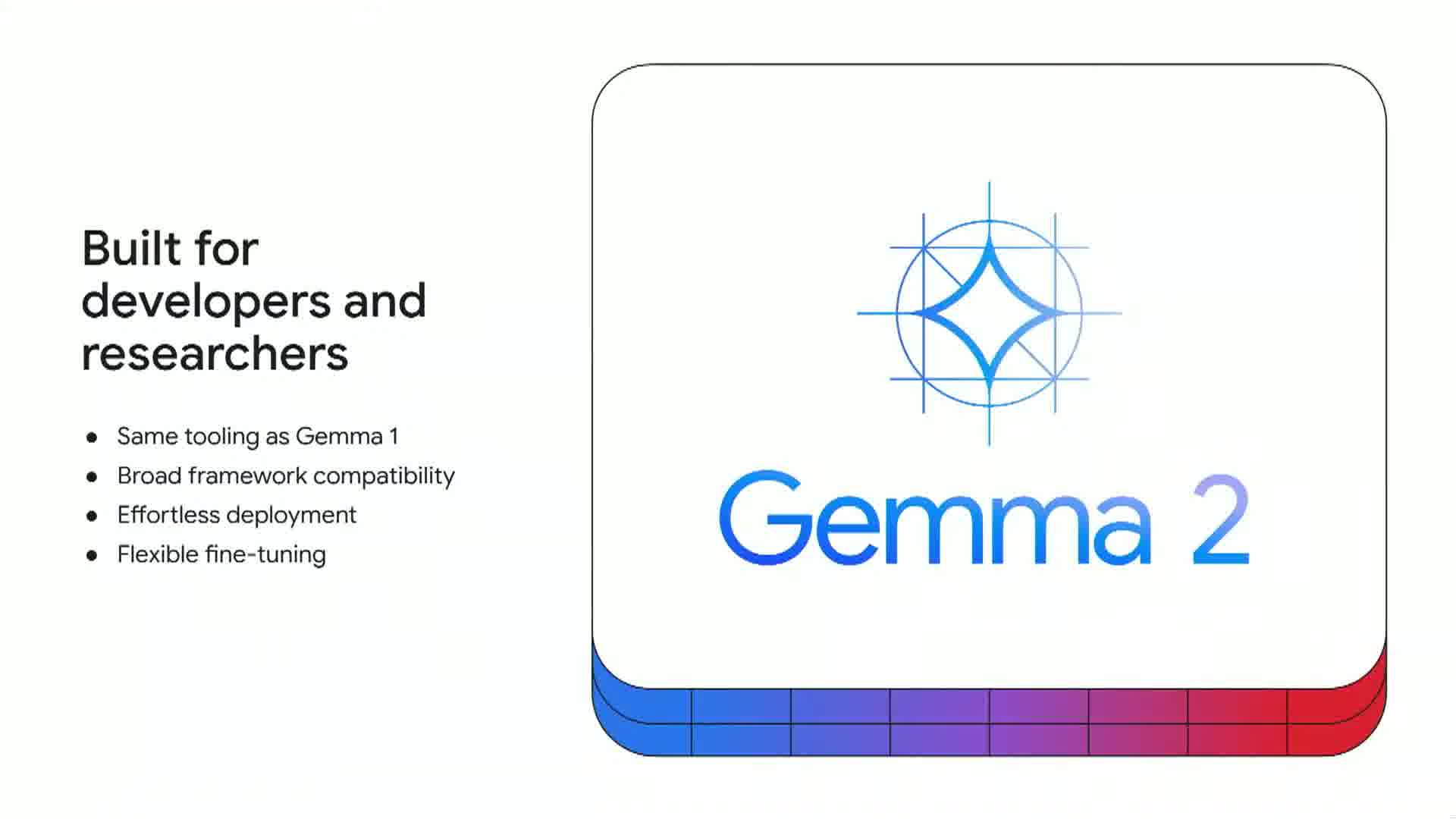
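To see why a recurrent design helps with long contexts, compare what each approach must keep in memory while generating. The sketch below is a toy illustration under simplifying assumptions, not RecurrentGemma's actual gated, vector-valued recurrent layer: an attention-style key/value cache gains one entry per token, while a linear recurrence folds every token into a fixed-size state. The decay `a`, the input scale `b`, and the scalar state are hypothetical choices made only for illustration.

```python
from typing import List, Tuple


def attention_cache_sizes(tokens: List[float]) -> List[int]:
    """Return the number of cached key/value entries after each decoding step."""
    cache: List[Tuple[float, float]] = []
    sizes = []
    for x in tokens:
        # Each new token appends its (key, value) pair; nothing is ever dropped,
        # so memory grows linearly with sequence length.
        cache.append((x, x))
        sizes.append(len(cache))
    return sizes


def linear_recurrence(tokens: List[float], a: float = 0.9, b: float = 0.1) -> List[float]:
    """Toy linear recurrence h_t = a * h_{t-1} + b * x_t with a single scalar state.

    The list of states is returned only for inspection; decoding itself
    needs nothing but the current value of h.
    """
    h = 0.0
    states = []
    for x in tokens:
        # The entire history is summarized in h, so per-step memory stays constant.
        h = a * h + b * x
        states.append(h)
    return states


if __name__ == "__main__":
    seq = [1.0] * 8
    print(attention_cache_sizes(seq))  # cache size grows with every token
    print(linear_recurrence(seq)[-1])  # the state is still a single float
```

The trade-off is that a fixed-size state must compress the history, whereas an attention cache keeps every token verbatim; hybrid designs such as Griffin mix both to balance quality and efficiency.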

Gemma 2 Launch

The most exciting part of the talk was the announcement of Gemma 2, which launched on the day of the conference. Key features include:

- Available in 9B and 27B parameter sizes

- Designed for easy integration into existing workflows

- Broad framework compatibility

- Improved documentation, guides, and tutorials

- Optimized for efficient fine-tuning
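A rough way to see what the two sizes mean for hardware is to estimate the memory needed just to hold the weights. The sketch below assumes nominal counts of 9 and 27 billion parameters and standard byte widths per precision; it ignores activations, the KV cache, and framework overhead, so real requirements are higher.

```python
# Approximate bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Estimate weight storage in decimal gigabytes (1 GB = 1e9 bytes).

    Only the weights are counted; activations, the KV cache, and runtime
    overhead are deliberately left out.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Nominal sizes taken from the model names; actual parameter counts differ slightly.
    for name, params in [("Gemma 2 9B", 9e9), ("Gemma 2 27B", 27e9)]:
        for precision in BYTES_PER_PARAM:
            print(f"{name} @ {precision}: ~{weight_memory_gb(params, precision):.1f} GB")
```

By this estimate the 27B model needs roughly 54 GB for bfloat16 weights alone, which is why quantized formats matter for running it on a single accelerator or a local machine.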

The speaker noted that the 27B model is now available in Google AI Studio for immediate experimentation.

You can find the 9B and 27B models on Hugging Face:

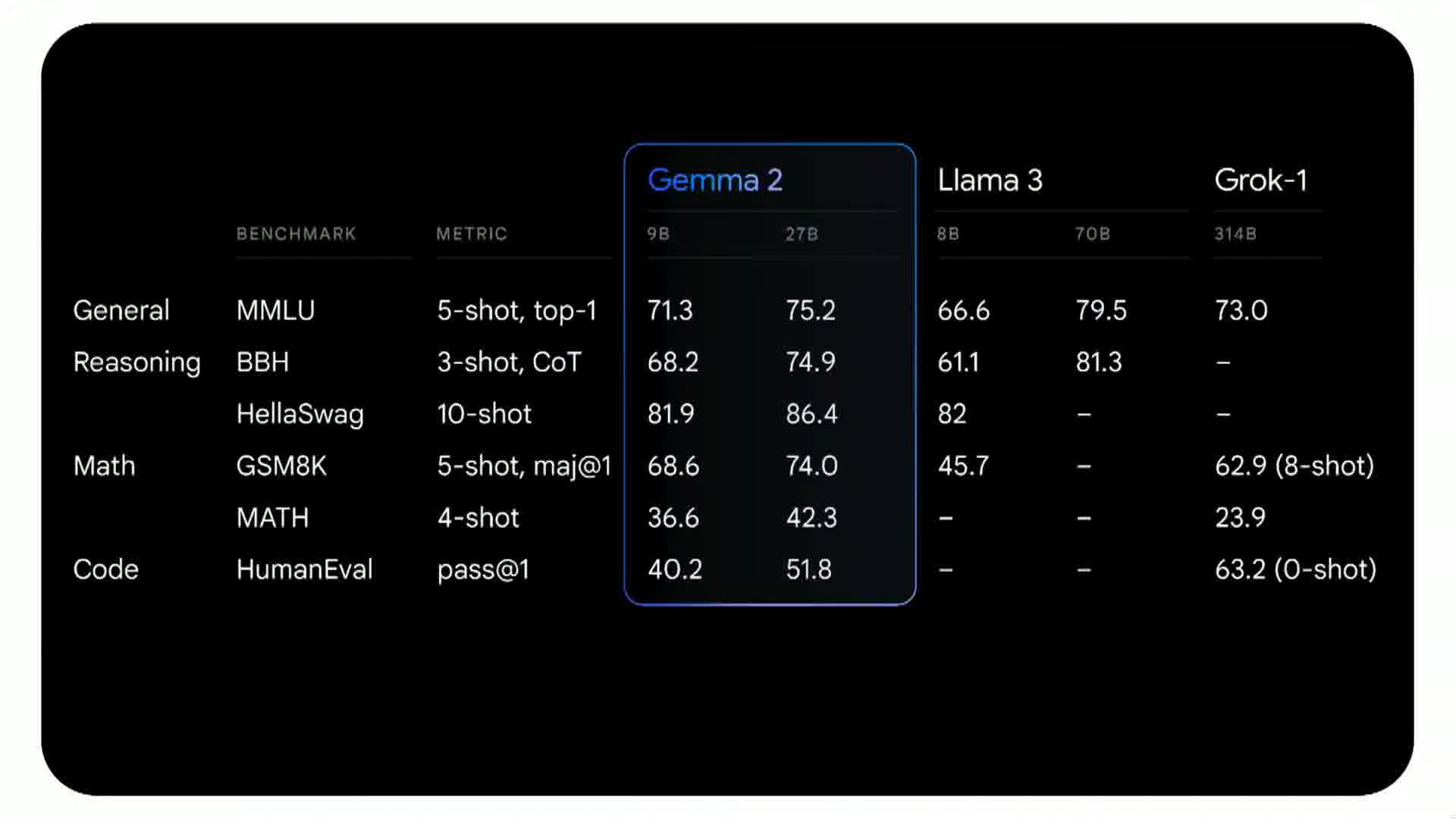
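As a starting point, the sketch below loads a Gemma 2 checkpoint with the Hugging Face `transformers` library. It assumes the repository IDs follow the `google/gemma-2-<size>` pattern, with an `-it` suffix for the instruction-tuned variants; that you have accepted the model license on Hugging Face and are logged in; and that `torch`, `transformers`, and `accelerate` are installed. The helper `gemma2_repo_id` is a hypothetical convenience function, not part of any library.

```python
def gemma2_repo_id(size: str, instruction_tuned: bool = True) -> str:
    """Build a Hugging Face repo ID such as 'google/gemma-2-9b-it' (hypothetical helper)."""
    if size not in ("9b", "27b"):
        raise ValueError(f"size not covered in this talk: {size!r}")
    suffix = "-it" if instruction_tuned else ""
    return f"google/gemma-2-{size}{suffix}"


def generate(prompt: str, size: str = "9b", max_new_tokens: int = 64) -> str:
    """Load an instruction-tuned Gemma 2 checkpoint and answer a single prompt."""
    # Heavy imports are deferred so the helper above works without them installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    repo_id = gemma2_repo_id(size)
    tokenizer = AutoTokenizer.from_pretrained(repo_id)
    model = AutoModelForCausalLM.from_pretrained(
        repo_id,
        torch_dtype=torch.bfloat16,  # half the memory of float32 weights
        device_map="auto",  # requires the accelerate package
    )
    # Let the tokenizer render the model's own chat template as plain text.
    messages = [{"role": "user", "content": prompt}]
    text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    # The template already inserts <bos>, so avoid adding special tokens twice.
    inputs = tokenizer(text, return_tensors="pt", add_special_tokens=False).to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, skipping the echoed prompt.
    new_tokens = output[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    print(gemma2_repo_id("27b"))
    # Uncomment to run; this downloads the full checkpoint on first use.
    # print(generate("Explain what Gemma 2 is in one sentence."))
```

Keeping the model download behind an explicit call makes it easy to swap in the 27B variant, or a different framework from the list above, without touching the rest of the code.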

Performance Benchmarks

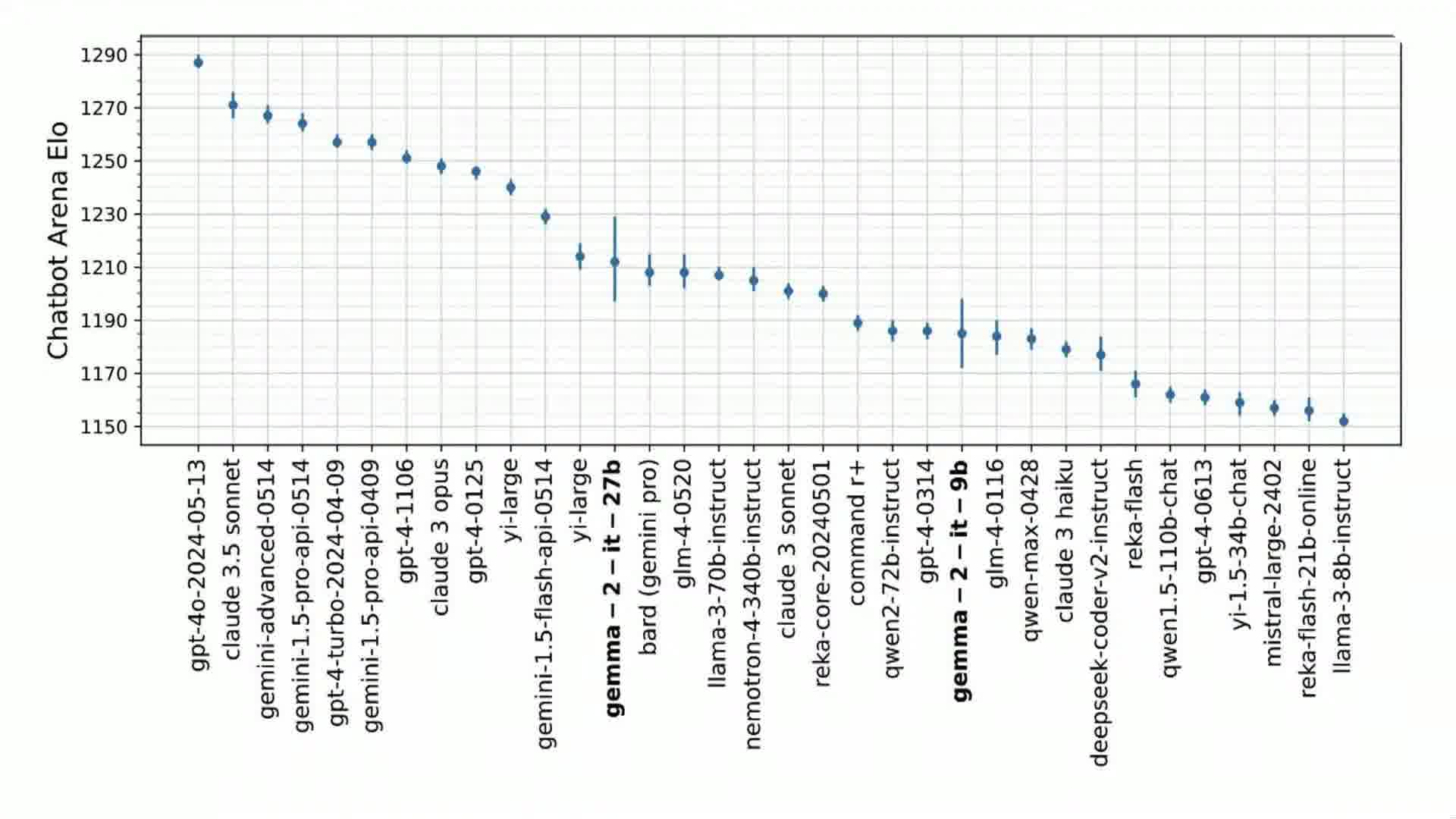

The presenter shared some impressive performance results for Gemma 2:

- Outperforms significantly larger models, including Llama 70B and Grok, on many benchmarks

- At the time of the talk, the 27B model was the top open model of its size on the LMSYS Chatbot Arena leaderboard

- Only outperformed by the Mixtral 8x7B model among open models of any size

Gemma Cookbook

A new resource called the Gemma Cookbook was introduced. It launched with 20 recipes covering applications of the Gemma models from beginner to advanced. Notably, the cookbook is open to pull requests, so the community can contribute its own use cases and applications.

You can find it here:

https://github.com/google-gemini/gemma-cookbook

Use Cases

The speaker mentioned a couple of interesting applications of Gemma models:

- Fine-tuning Gemma to achieve state-of-the-art performance on over 200 variants of Indic languages

- A small-business application that extracts and prioritizes orders from unstructured emails
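The order-prioritization use case was only described at a high level, so the following is a hypothetical sketch of how such a pipeline might be wired up, not the application shown in the talk. It assumes the model is prompted with Gemma's turn-based chat format and asked to answer with one JSON object per email. The `fake_model` stub stands in for a real Gemma call so the parsing and ranking logic can run on its own, and the field names `customer`, `items`, and `urgency` are invented for illustration.

```python
import json
from typing import Callable, Dict, List

# Hypothetical instruction; a real application would tune this prompt.
INSTRUCTION = (
    "Extract the order from the email below. Reply with JSON only, using the keys "
    '"customer" (string), "items" (list of strings) and "urgency" (integer 1-5, 5 = most urgent).'
)


def build_gemma_prompt(user_message: str) -> str:
    """Wrap one user turn in Gemma's turn markers and open the model's turn.

    The <bos> token is assumed to be added by the tokenizer at encoding time.
    """
    return (
        "<start_of_turn>user\n"
        f"{user_message}<end_of_turn>\n"
        "<start_of_turn>model\n"
    )


def prioritize_orders(emails: List[str], model: Callable[[str], str]) -> List[Dict]:
    """Ask the model to structure each email, then sort orders by descending urgency."""
    orders = []
    for email in emails:
        prompt = build_gemma_prompt(f"{INSTRUCTION}\n\nEmail:\n{email}")
        try:
            orders.append(json.loads(model(prompt)))
        except json.JSONDecodeError:
            # Skip replies that are not valid JSON instead of failing the whole batch.
            continue
    # sorted() is stable even with reverse=True, so equally urgent orders keep arrival order.
    return sorted(orders, key=lambda o: o.get("urgency", 0), reverse=True)


def fake_model(prompt: str) -> str:
    """Stand-in for a real Gemma call: returns canned replies keyed on the email text."""
    if "ASAP" in prompt:
        return '{"customer": "Acme", "items": ["bolts"], "urgency": 5}'
    if "no rush" in prompt:
        return '{"customer": "Globex", "items": ["nuts"], "urgency": 1}'
    return "Sorry, I could not find an order."


if __name__ == "__main__":
    inbox = [
        "Hi, please send 200 nuts, no rush. - Globex",
        "Need 500 bolts ASAP! - Acme",
        "Thanks for the great service last week!",
    ]
    for order in prioritize_orders(inbox, fake_model):
        print(order["urgency"], order["customer"], order["items"])
```

Asking for structured JSON and validating it before ranking keeps the model's job narrow (reading messy text) while the ordering logic stays deterministic and easy to test.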

This talk showcased Google's commitment to open models and highlighted the rapid advancements in the field. The Gemma 2 models, in particular, appear to offer an impressive balance of performance and accessibility for developers and researchers.