Deep Dive: Moondream: how does a tiny vision model slap so hard?


Vikhyat Korrapati @vikhyatk / M87 Labs

Watch it on YouTube | AI.Engineer Talk Details

Overview

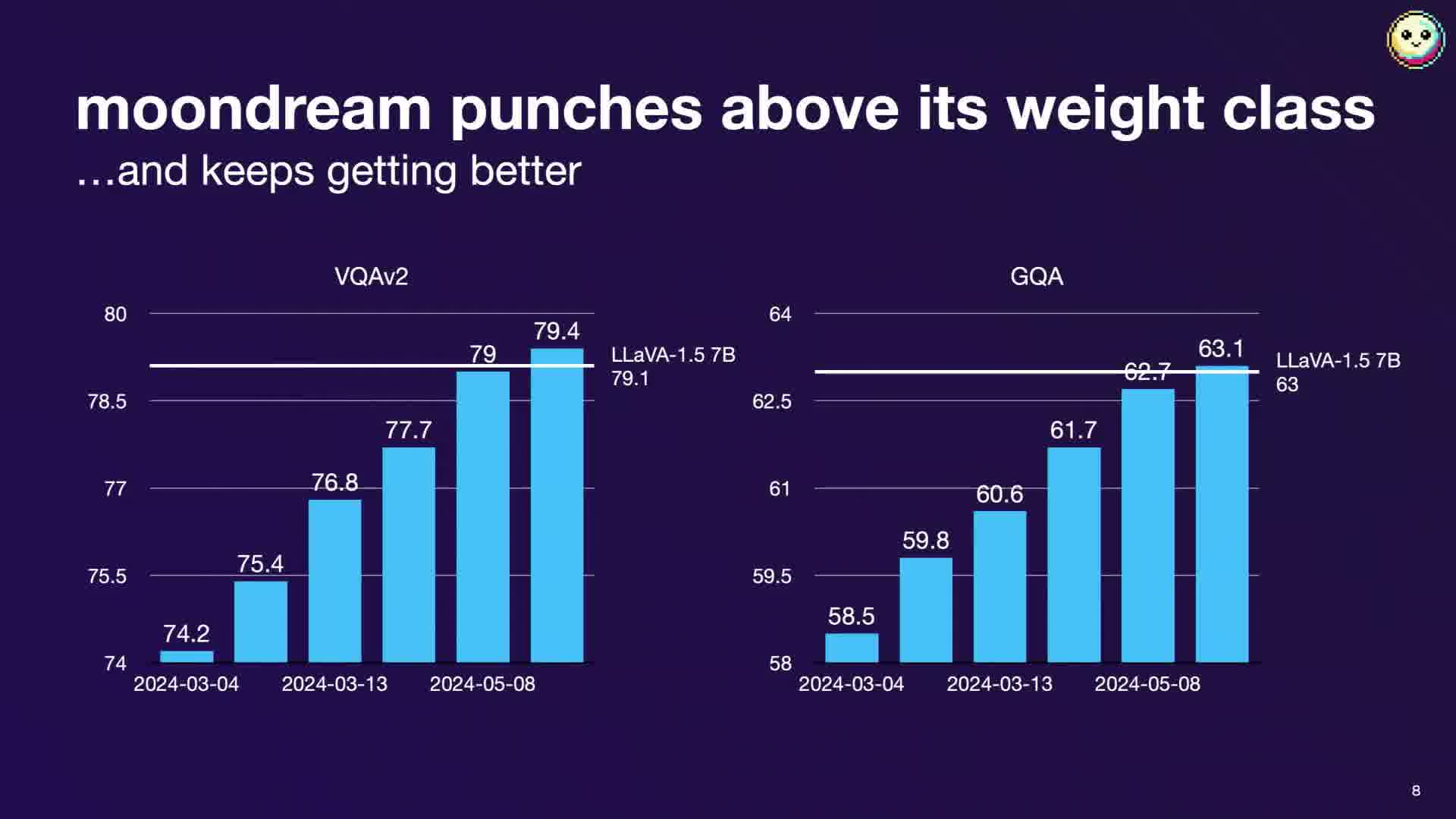

Vik presented Moondream, an open-source vision language model with fewer than 2 billion parameters. Despite its small size, Moondream performs comparably to models 4 times larger, such as LLaVA 1.5.

Key Features of Moondream

- Apache 2.0 licensed, allowing for flexible use

- Capable of image captioning, object detection, and answering questions about images

- Focused on accuracy and avoiding hallucinations

- Designed as a developer tool rather than a general-purpose AI

Technical Details

Model Architecture

- Fuses Google's SigLIP vision encoder with Microsoft's Phi-1.5 text model

- Builds on pre-trained models instead of training from scratch, which would be far more expensive
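To make the idea of fusing two pre-trained models concrete, here is a minimal sketch in plain Python. One common way to connect a vision encoder to a text model is a small projection that maps image patch embeddings into the text model's embedding space and places them in front of the prompt tokens. The recap does not say how Moondream wires the two together, so the approach, the tiny dimensions, and every function name below are assumptions for illustration only.

```python
import random

# Hypothetical, tiny dimensions for illustration (not Moondream's real sizes).
VISION_DIM = 6  # width of each patch embedding from the vision encoder
TEXT_DIM = 4    # width of the text model's token embeddings


def make_projection(in_dim, out_dim, seed=0):
    """Random linear map standing in for a trained adapter layer."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(out_dim)]


def project(patch, weights):
    """Map one vision patch embedding into the text embedding space."""
    return [sum(w * x for w, x in zip(row, patch)) for row in weights]


def fuse(patch_embeddings, text_embeddings, weights):
    """Place the projected image patches in front of the text token embeddings."""
    image_tokens = [project(p, weights) for p in patch_embeddings]
    return image_tokens + text_embeddings


# Fake encoder outputs: 3 image patches and 2 prompt tokens.
rng = random.Random(1)
patches = [[rng.random() for _ in range(VISION_DIM)] for _ in range(3)]
prompt = [[rng.random() for _ in range(TEXT_DIM)] for _ in range(2)]

sequence = fuse(patches, prompt, make_projection(VISION_DIM, TEXT_DIM))
print(len(sequence), len(sequence[0]))  # 5 tokens, each TEXT_DIM wide
```

In a setup like this, the text model sees the image as a few extra tokens at the start of its input, which is why both pre-trained components can be reused largely as they are.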

Training Data

- Trained on approximately 35 million images

- Relies heavily on synthetic data due to the high cost of human-annotated datasets

Synthetic Data Generation

- Uses a sophisticated pipeline to process existing datasets like COCO and Localized Narratives

- Employs careful prompt engineering to avoid hallucinations and model biases

- Injects entropy into the data generation process to prevent model collapse
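The points above can be sketched in a few lines of Python. This is not the pipeline from the talk; it only illustrates two of the ideas under stated assumptions: grounding each generation prompt in existing human annotations (to discourage hallucination) and randomizing the prompt's style, tone, and fact order (one simple way to inject entropy). The templates, the helper names, and the sample annotations are all hypothetical.

```python
import random

# Hypothetical instruction templates; a real pipeline would likely use many more.
QUESTION_STYLES = [
    "Write a short question about the scene.",
    "Write a question about an object's color or position.",
    "Write a yes/no question that the facts can answer.",
    "Write a question asking how many of something are visible.",
]
TONES = ["concise", "conversational", "detailed"]


def build_prompt(annotations, rng):
    """Build one generation prompt grounded in human-written annotations."""
    style = rng.choice(QUESTION_STYLES)
    tone = rng.choice(TONES)
    # Shuffle the facts so the generator does not always anchor on the first one.
    facts = annotations[:]
    rng.shuffle(facts)
    fact_lines = "\n".join(f"- {fact}" for fact in facts)
    return (
        "Use ONLY the facts below; do not mention anything they do not state.\n"
        f"{fact_lines}\n"
        f"{style} Answer it in a {tone} tone."
    )


def build_prompts(annotations, n, seed=0):
    """Generate n varied prompts for the same image annotations."""
    rng = random.Random(seed)
    return [build_prompt(annotations, rng) for _ in range(n)]


# Made-up annotations in the spirit of a captioning dataset.
sample_facts = [
    "a dog sits on a red couch",
    "a lamp stands next to the couch",
    "a window is visible in the background",
]
prompts = build_prompts(sample_facts, 20)
print(prompts[0])
```

Each prompt would then be sent to a text-generation model. Because the instructions vary from call to call, the resulting question and answer pairs are less likely to share one repetitive pattern.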

Key Learnings

- Community engagement was crucial for development and adoption

- Open-source nature facilitated community contributions and enterprise trust

- Safety guardrails are best implemented at the application layer for dev tools

- Tiny models have significant advantages in terms of efficiency and deployment flexibility

- Prompting offers a superior developer experience compared to custom model training

Demo

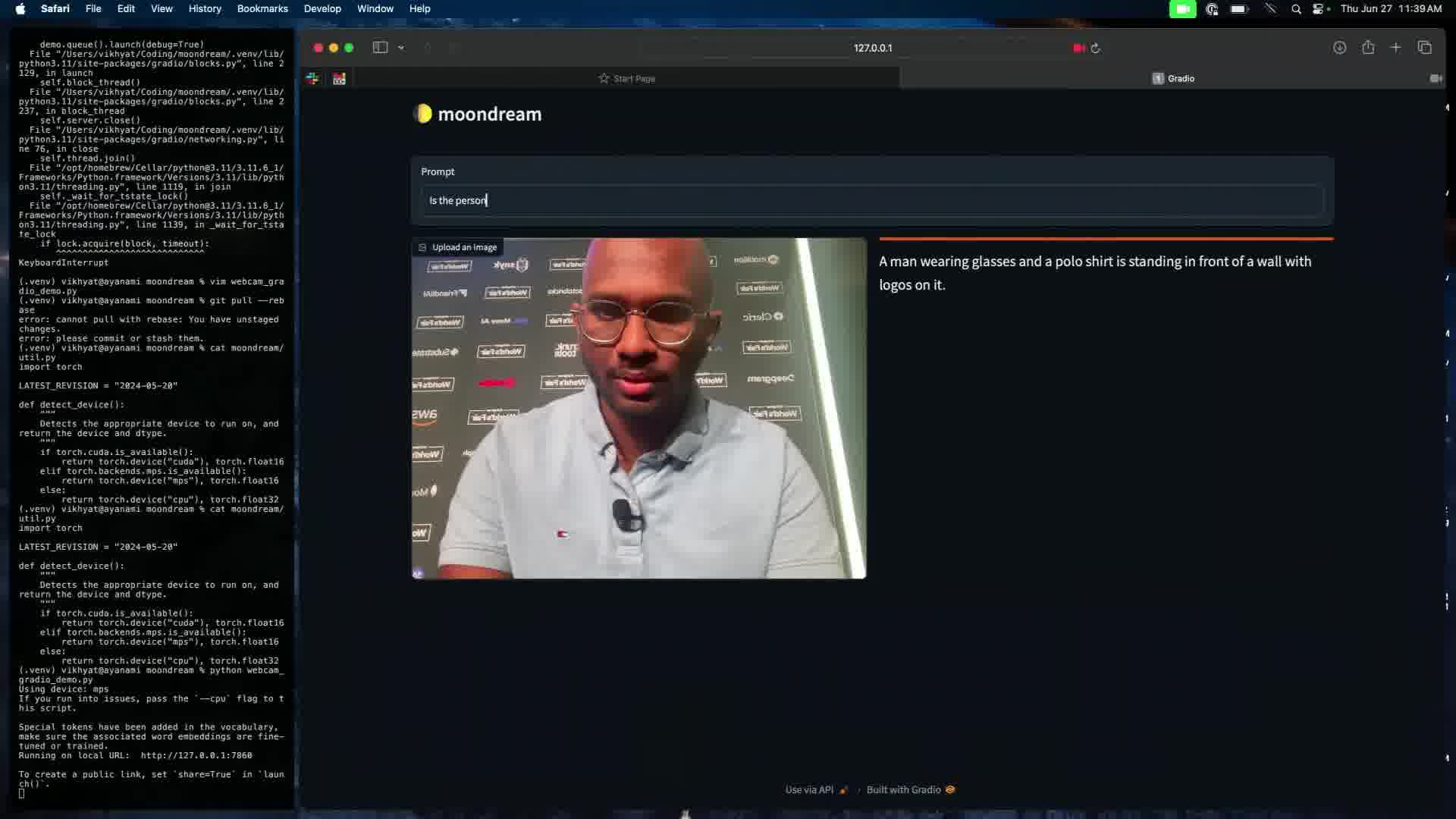

Vik attempted a live demo using a webcam and Moondream running locally to describe what it sees in real time. The demo showcased the model's ability to answer questions about the scene, such as whether the speaker was wearing glasses.

Future Directions

- Working on more compressed image representations to improve processing speed

- Raised seed funding; now expanding the team and planning a major release later in the summer

Summary

Moondream is available on Hugging Face.

This talk provided a deep dive into the development of a compact yet powerful vision language model.

Vik's insights on synthetic data generation and the advantages of smaller models for real-world applications were particularly noteworthy. The emphasis on developer experience and open-source collaboration sets Moondream apart in the crowded field of AI models.