Deep Dive: Llamafile: bringing AI to the masses with fast CPU inference

← Head back to all of our AI Engineer World's Fair recaps

Justine Tunney @justinetunney / Mozilla

Stephen Hood @stlhood / Mozilla

Watch it on YouTube | AI.Engineer Talk Details

Overview

Steven Hood and Justine Tunney from Mozilla presented their work on LAMAFILE, an open-source project aimed at making AI more accessible. The talk covered two main aspects:

Creating single-file executables from AI model weights

Improving CPU inference speed for AI models

Key Technical Points

Single-File Executables

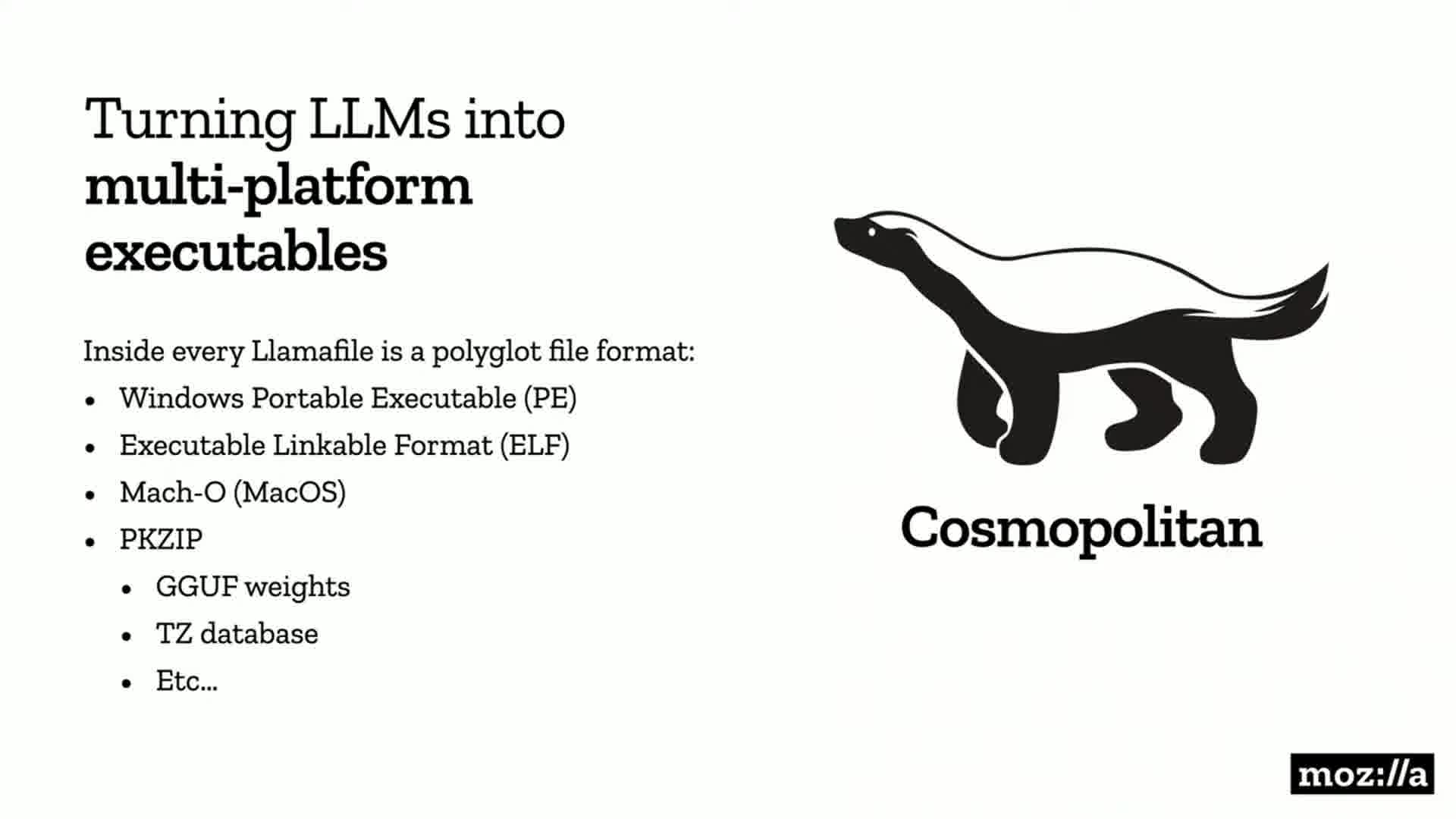

- Llamafile converts AI model weights into a single executable file

- Runs on multiple operating systems and hardware architectures without installation

- Uses a clever hack: combining a Unix shell script with an MS-DOS stub in a portable executable

CPU Inference Speed Improvements

Justine Tunney shared several techniques they've used to boost CPU performance:

-

Matrix Multiplication Optimization

- Unrolling the outer loop instead of the inner loop

- This approach helps the algorithm "BLAS kernel"

- Resulted in 35-100% speed increases depending on hardware and model

-

GPU-inspired Programming Model

- Implemented CPU version of CUDA's syncthreads() function

- Encourages "lockstep programming model" for CPUs

-

Community Contributions

- Improvements to quantized formats

- Performance boosts on both x86 and ARM architectures

Demo

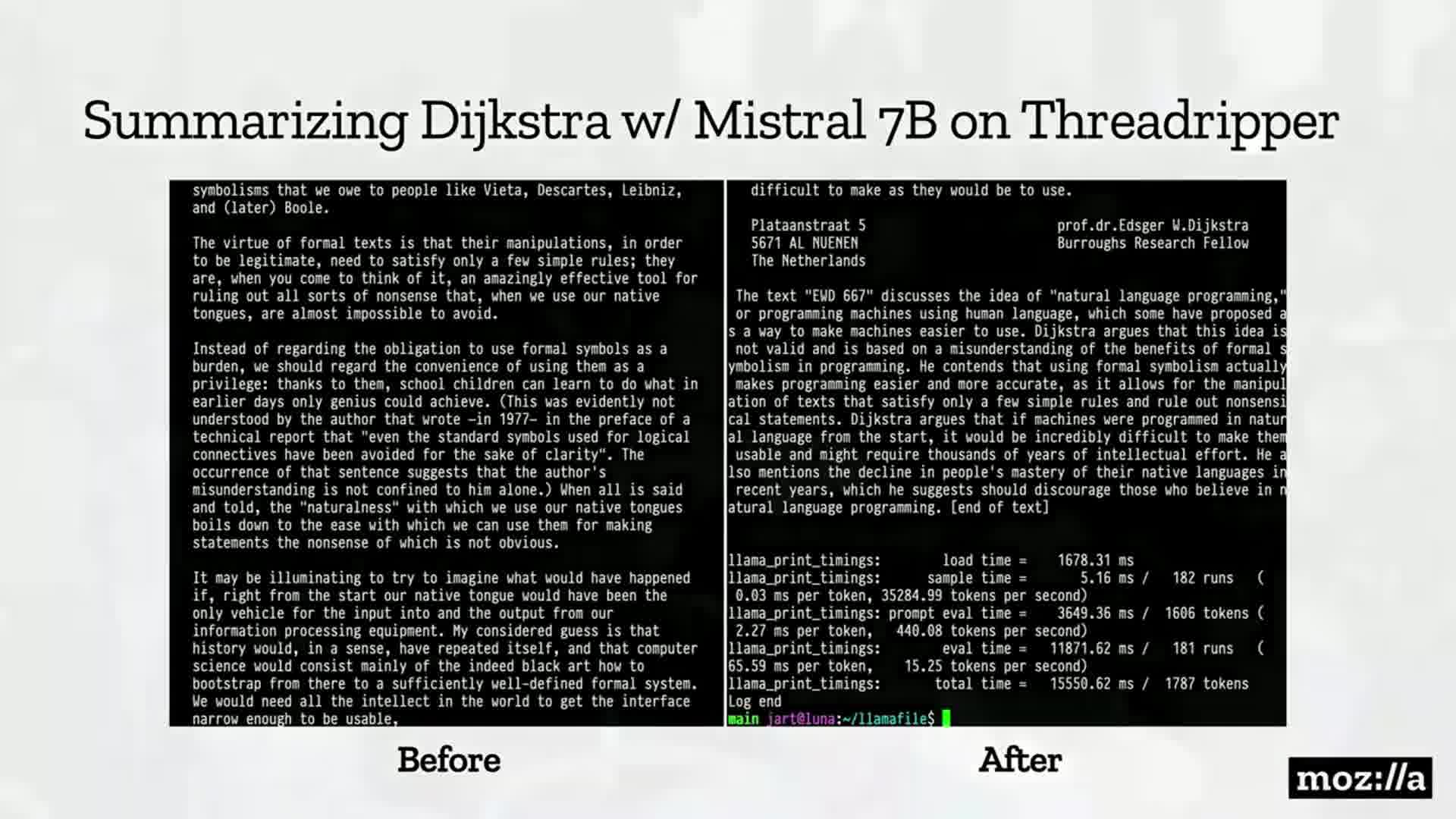

The speakers showed a live demo comparing the old and new versions of Llamafile for text summarization:

- Task: Summarizing an essay by Dijkstra

- Result: The new version processed the text significantly faster, finishing the summarization while the old version was still processing

Additional Resources

- Cosmopolitan library (used for cross-platform compatibility)

- TinyBLAS library (for GPU support without SDK dependencies)

- Mozilla Builders Accelerator

- SQLite VEC project by Alex Garcia

Summary

This talk really highlighted the potential for making AI more accessible and efficient on consumer-grade hardware. The focus on CPU optimization is particularly interesting, as it opens up possibilities for running advanced AI models on a wider range of devices.

The single-file executable approach could be a game-changer for AI deployment, especially for developers working on cross-platform applications. It's worth keeping an eye on how this technology develops and potentially integrates with existing AI workflows.

For those interested in the nitty-gritty of performance optimization, Justine's insights on matrix multiplication and GPU-inspired CPU programming are definitely worth a deeper dive. These techniques could have applications beyond just AI, potentially benefiting other computationally intensive tasks.

Lastly, Mozilla's efforts to support open-source AI development through their Builders program and accelerator are encouraging. It'll be interesting to see what kind of projects emerge from these initiatives and how they might shape the future of accessible AI.