Your First Message

Get Started with Your First Message

This guide helps you make your first requests to a large language model (LLM), using local inference through Ollama or, alternatively, a commercial model provider.

This guide picks up where the quickstart leaves off, with your PromptPanel dashboard running at http://localhost:4000.

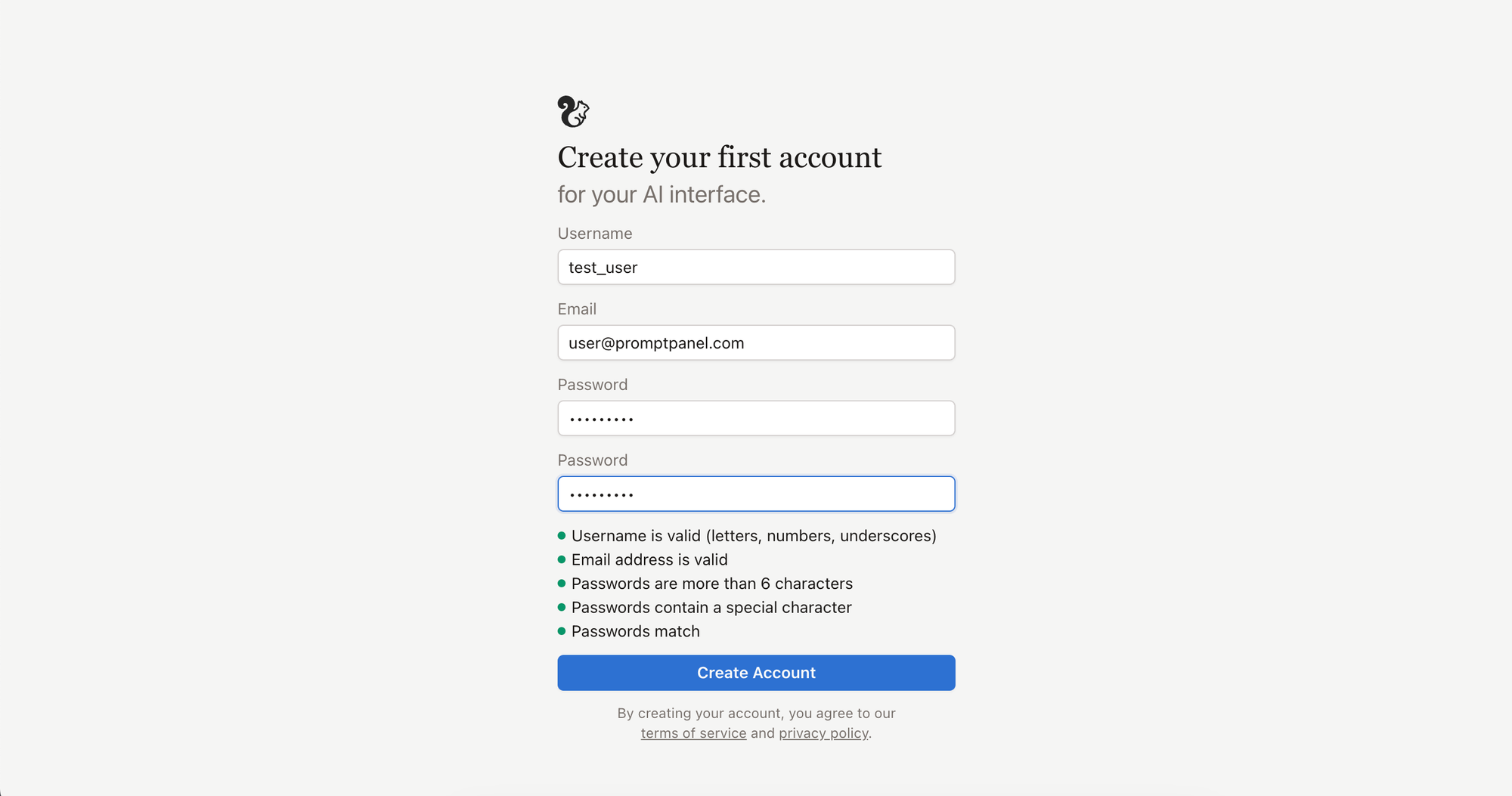

Step 1: Create a New Administrator Account

Create a new administrator account for your instance.

This account can add and manage other users later from the settings.

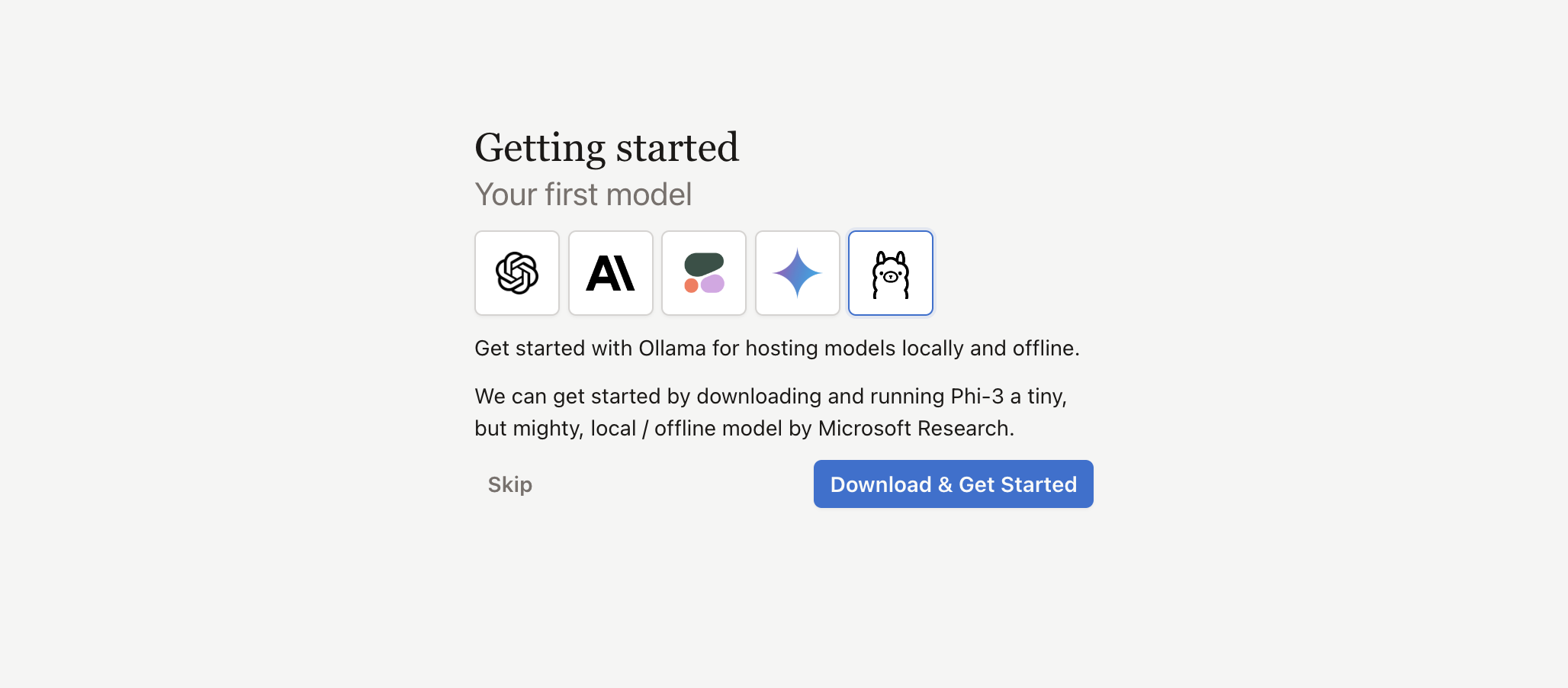

Step 2: Download a Model with Ollama for Offline Use

Download Microsoft's Phi-3 model through Ollama for offline, local use.

Finding the Right Model

Phi-3 is a strong small model for running on your local machine. If your hardware can handle it, we recommend also trying larger models (7B parameters and up).
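One rough way to judge whether your machine is "capable" of a larger model: the weights alone need about (parameters × bits per weight ÷ 8) bytes of memory. The sketch below assumes 4-bit quantized weights by default (an assumption; check the tag you actually pull) and ignores the context cache and runtime overhead, so treat the result as a lower bound rather than a hardware requirement. The function name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float = 4.0) -> float:
    """Estimate the memory (in GB, 1 GB = 1e9 bytes) needed to hold a model's weights.

    This is a lower bound: it ignores the context (KV) cache and runtime overhead.
    The 4-bit default is an assumed quantization level, not a guarantee.
    """
    # Each weight takes bits_per_weight / 8 bytes, and billions of
    # parameters times bytes per parameter gives gigabytes directly.
    return params_billions * bits_per_weight / 8
```

For example, `weight_memory_gb(7)` gives 3.5 for a 7B model at 4 bits, while the same model at 16 bits gives `weight_memory_gb(7, 16)`, or 14.0. Phi-3 Mini, at about 3.8B parameters, comes out near 1.9 GB by the same estimate.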

You can browse the available models in the Ollama Library (https://ollama.com/library).

If models run slowly on your machine, we recommend using a commercial model from OpenAI, Google Gemini, or Anthropic for inference instead.

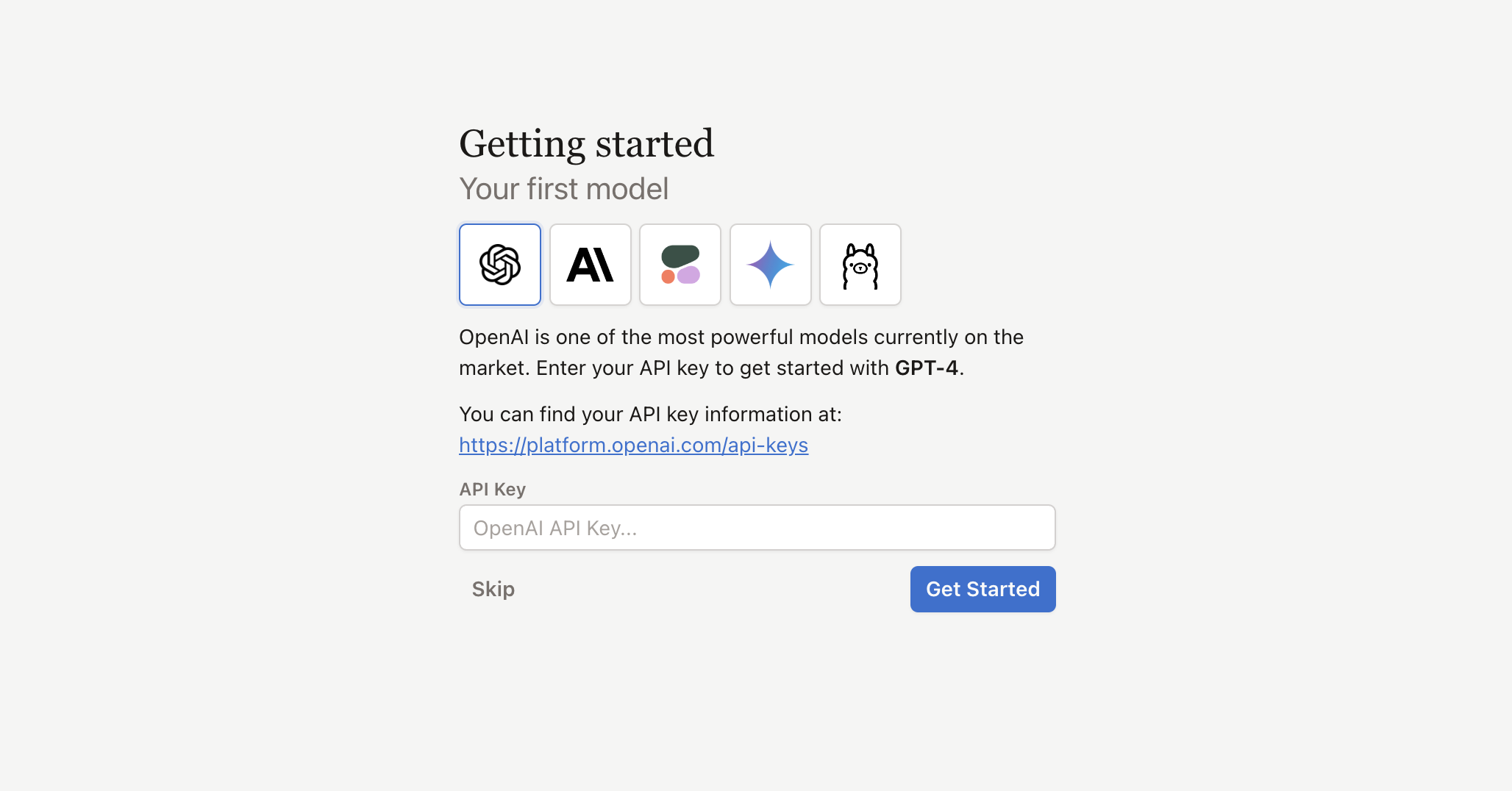
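PromptPanel can pull the model for you from its interface. If you would rather pull it yourself, Ollama also exposes a REST API, and the sketch below calls its `POST /api/pull` endpoint using only Python's standard library. It assumes Ollama is reachable at its default address, http://localhost:11434 (your Docker setup may differ), that `phi3` is the Ollama Library tag for Phi-3, and a recent Ollama version that accepts a `model` field (older releases used `name`). The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is reachable at its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model: str) -> urllib.request.Request:
    """Build a POST /api/pull request that downloads `model` without streaming progress."""
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model: str = "phi3") -> dict:
    """Send the pull request and return Ollama's final status object."""
    # With streaming off, Ollama replies once, after the download completes.
    with urllib.request.urlopen(build_pull_request(model)) as resp:
        return json.loads(resp.read())
```

Calling `pull_model("phi3")` blocks until the download finishes, which can take several minutes for larger models.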

Step 2 (Alt): Enter Your API Key for a Commercial Model

Select your model provider from OpenAI, Anthropic, Cohere, or Google.

Enter the API key from your selected provider and click "Get Started".

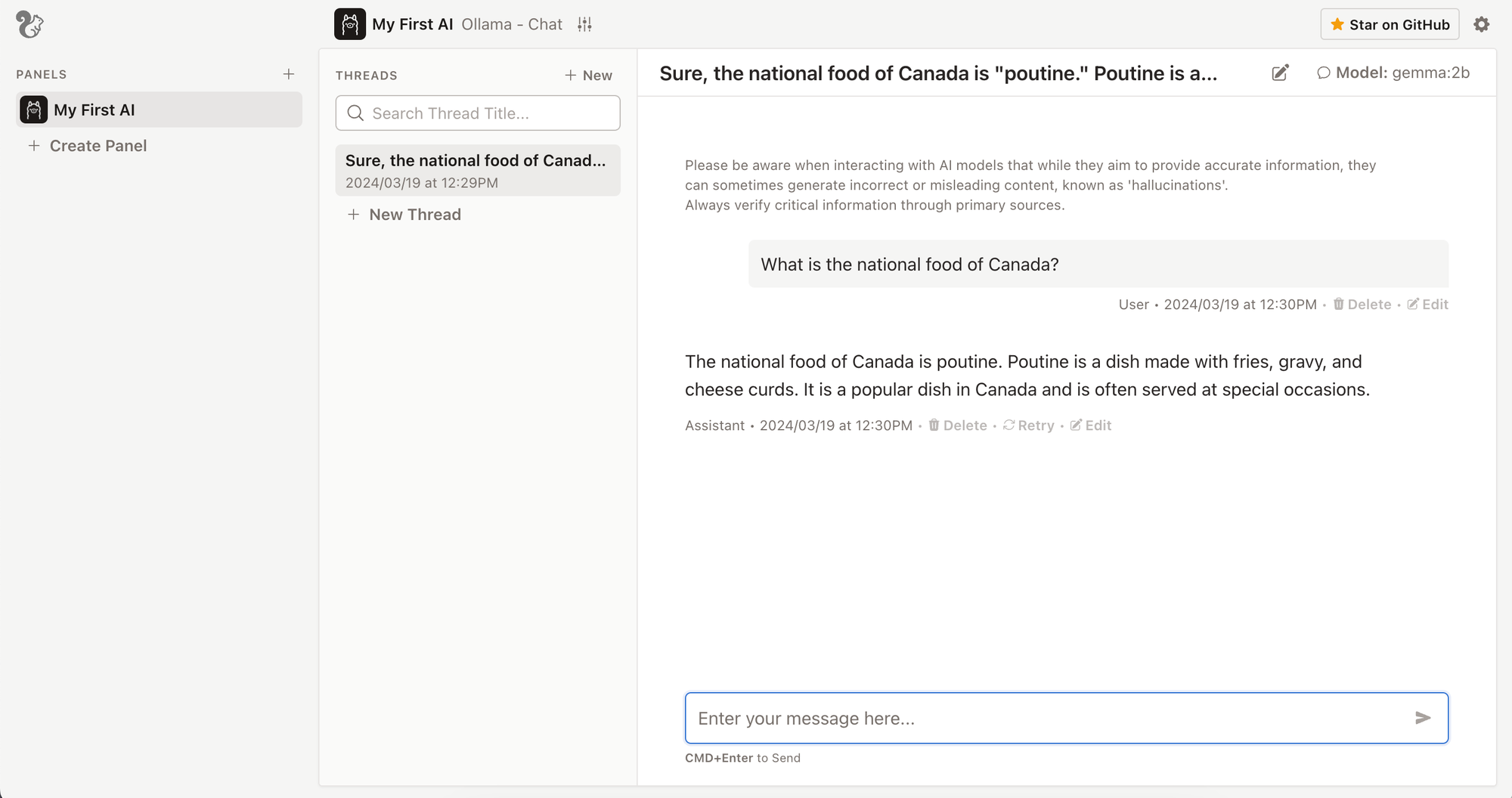

Step 3: Send Your First Message

Send your first LLM message.

Note: We would accept poutine as an answer here, or maple syrup.

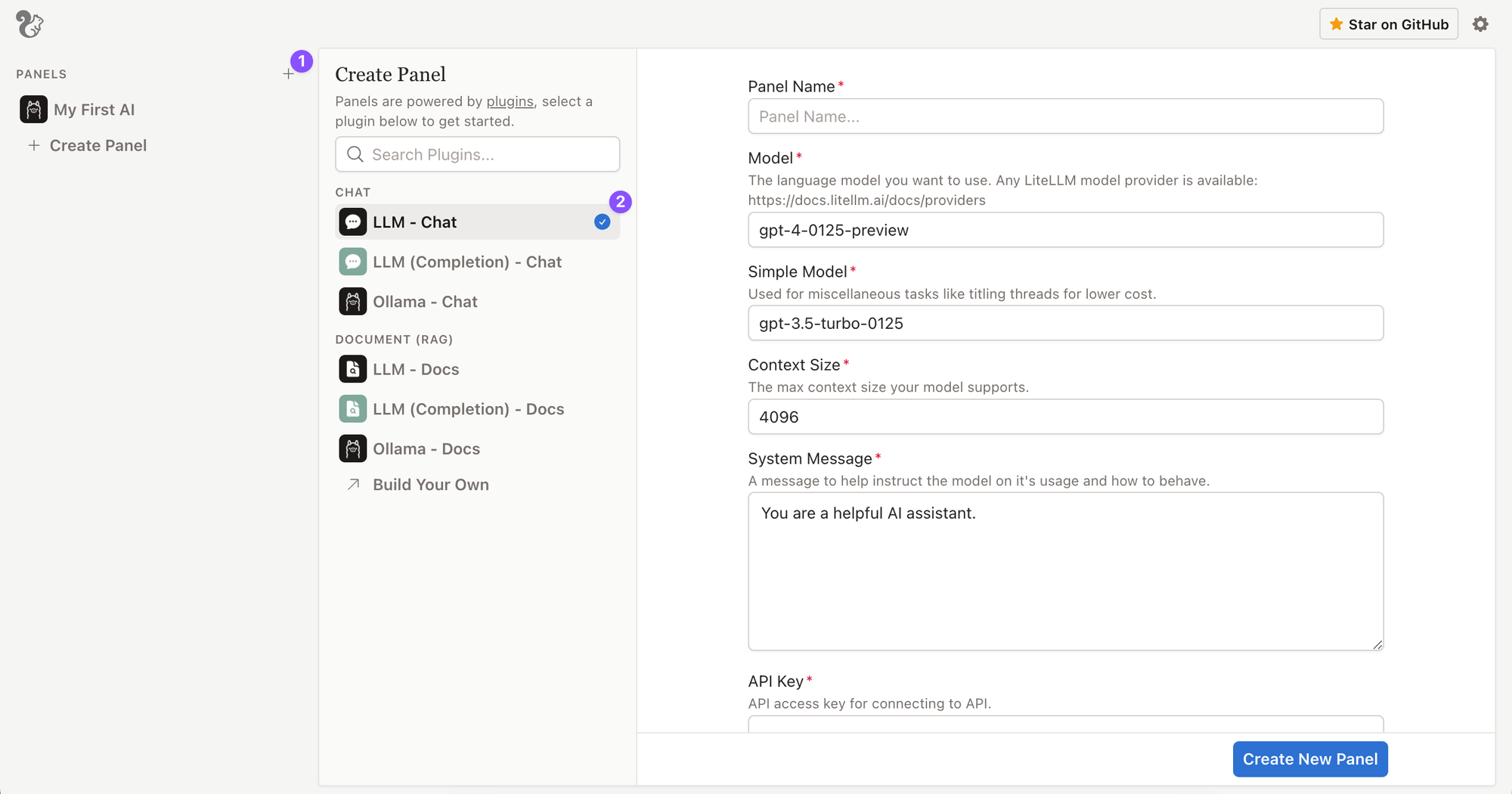
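PromptPanel sends the message for you, but it can help to see what a single local-inference request looks like. The sketch below posts one user message to Ollama's `POST /api/chat` endpoint with streaming turned off and reads the reply from `message.content`. It is not PromptPanel's own code path; the default Ollama address and the `phi3` model tag are assumptions, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; PromptPanel's own setup may differ.
OLLAMA_URL = "http://localhost:11434"


def build_chat_payload(model: str, prompt: str) -> dict:
    """Return a non-streaming /api/chat body containing a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def send_message(prompt: str, model: str = "phi3") -> str:
    """POST one user message to Ollama and return the assistant's reply text."""
    body = json.dumps(build_chat_payload(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        reply = json.loads(resp.read())
    # A non-streaming chat response carries the reply under message.content.
    return reply["message"]["content"]
```

With Ollama running and the model pulled, `print(send_message("Explain what a large language model is in one sentence."))` prints the model's answer.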

Step 4 (Optional): Create Another Panel

- Select the "New" button to start the create panel flow.

- Select a plugin from the list to create a new panel.

- Think of panels as applets or bots.

- Plugins contain both the configuration information and the logic that determines how your panel functions.

- Enter the required information and select "Create Plugin".

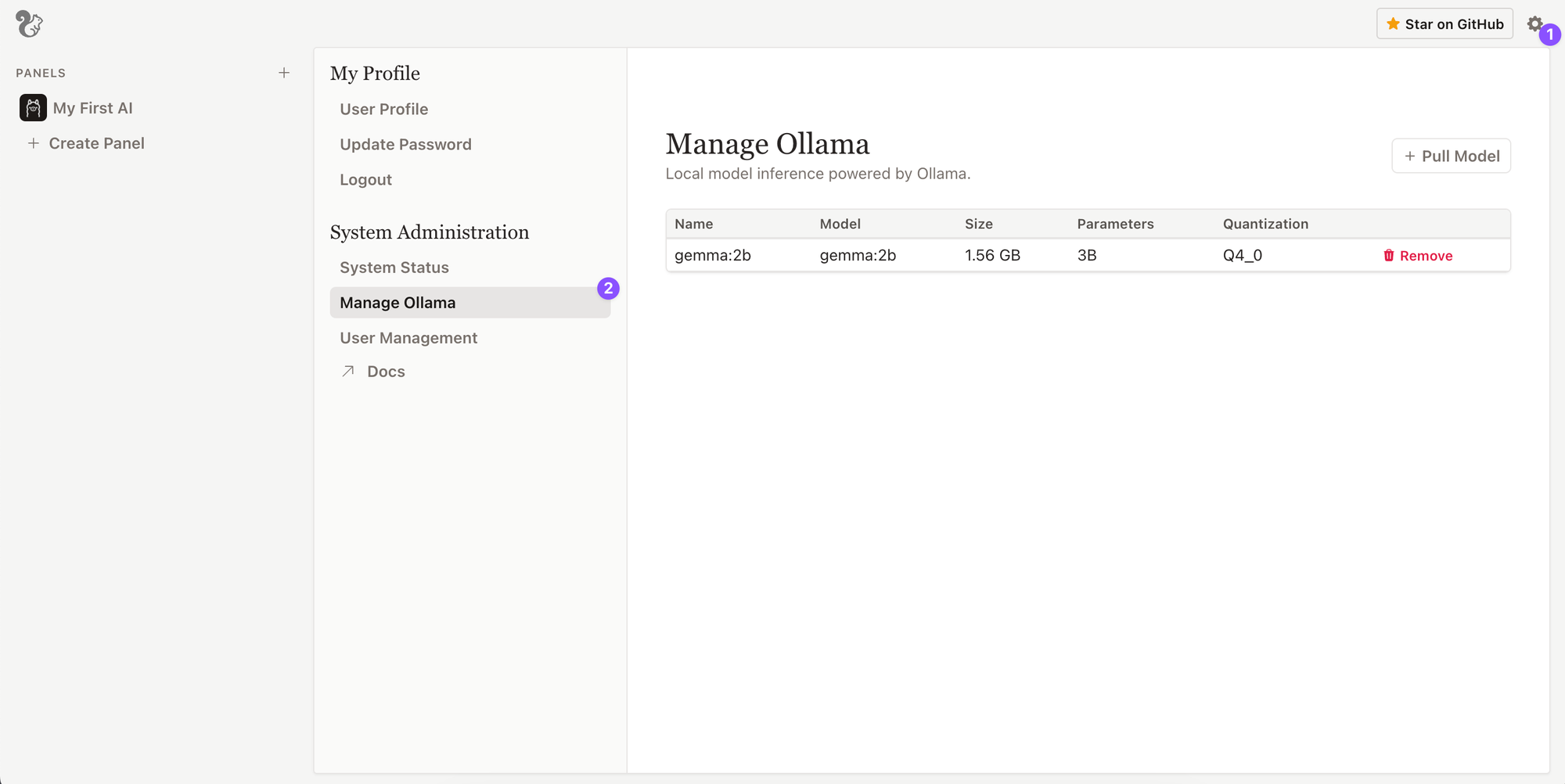

Step 5 (Optional): Manage Your Models

- Select the settings button to open your settings.

- Select "Manage Ollama" to bring up a list of models. From here you can:

  - Pull new models from the Ollama library (https://ollama.com/library).

  - Remove models that have been pulled to your system.

- Note: Any models loaded into Ollama externally will also appear here.

- Note: Only administrator users have access to the "Manage Ollama" functions.
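Ollama's REST API offers equivalent list and remove operations, and because Ollama reports every model stored locally regardless of how it was pulled, this is consistent with externally loaded models appearing in the list. The sketch below reads `GET /api/tags` and issues `DELETE /api/delete` with Python's standard library. It assumes Ollama's default address, http://localhost:11434, and a recent Ollama version that accepts a `model` field for deletion; the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default address


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from a GET /api/tags response body."""
    return [m["name"] for m in tags_response.get("models", [])]


def list_models() -> list[str]:
    """Return the names of every model Ollama has stored locally."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags") as resp:
        return model_names(json.loads(resp.read()))


def build_delete_request(model: str) -> urllib.request.Request:
    """Build a DELETE /api/delete request for a locally pulled model."""
    body = json.dumps({"model": model}).encode("utf-8")
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/delete",
        data=body,
        headers={"Content-Type": "application/json"},
        method="DELETE",
    )


def remove_model(model: str) -> int:
    """Delete `model` from Ollama and return the HTTP status (200 on success).

    urlopen raises urllib.error.HTTPError if Ollama answers with an error,
    for example 404 when the model does not exist.
    """
    with urllib.request.urlopen(build_delete_request(model)) as resp:
        return resp.status
```

For instance, `list_models()` returns tags such as `"phi3:latest"`, and passing one of those names to `remove_model` deletes it from disk.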