Ollama (Local Model) Management

PromptPanel Pro can manage the large language models hosted on a connected Ollama instance.

You can work with your Ollama models directly from the PromptPanel Pro interface, without switching to a separate tool.

Environment Variable

To enable Ollama management, set the PROMPT_OLLAMA_HOST environment variable when you start the PromptPanel Pro container.

Set it to the address of your running Ollama inference server. PromptPanel Pro uses this address to connect to Ollama.
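Before starting the container, it can help to confirm that the value you plan to use for PROMPT_OLLAMA_HOST actually reaches Ollama. The sketch below is a hypothetical preflight check, not part of PromptPanel Pro. It assumes that PromptPanel Pro accepts an http(s) URL such as `http://ollama:11434`. The helper names `resolve_ollama_host`, `tags_url`, and `ollama_is_reachable` are illustrative. The check queries Ollama's `GET /api/tags` endpoint, which lists locally available models, and falls back to Ollama's default port 11434 when only a bare host name is given.

```python
import json
import os
import urllib.request
from urllib.parse import urlparse

# Ollama's default listening port; adjust if your server uses another one.
DEFAULT_OLLAMA_PORT = 11434


def resolve_ollama_host(value: str) -> str:
    """Normalize a candidate PROMPT_OLLAMA_HOST value into a base URL.

    Assumption (not confirmed by PromptPanel Pro): an http(s) URL is expected.
    A bare host such as "ollama" gets an http:// scheme and the default port.
    """
    value = value.strip().rstrip("/")
    if not value:
        raise ValueError("PROMPT_OLLAMA_HOST is empty")
    bare = "://" not in value
    parsed = urlparse("http://" + value if bare else value)
    port = parsed.port  # raises ValueError for a non-numeric or out-of-range port
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        raise ValueError(f"not a usable Ollama address: {value!r}")
    netloc = parsed.netloc
    if bare and port is None:
        netloc = f"{netloc}:{DEFAULT_OLLAMA_PORT}"
    return f"{parsed.scheme}://{netloc}{parsed.path}"


def tags_url(base_url: str) -> str:
    # GET /api/tags is Ollama's endpoint for listing local models.
    return base_url + "/api/tags"


def ollama_is_reachable(base_url: str, timeout: float = 5.0) -> bool:
    """Return True if the Ollama server answers /api/tags with JSON."""
    try:
        with urllib.request.urlopen(tags_url(base_url), timeout=timeout) as resp:
            json.load(resp)  # expect a JSON body such as {"models": [...]}
            return resp.status == 200
    except (OSError, ValueError):  # connection, HTTP, timeout, or JSON errors
        return False


if __name__ == "__main__":
    raw = os.environ.get("PROMPT_OLLAMA_HOST")
    if raw is None:
        print("PROMPT_OLLAMA_HOST is not set")
    else:
        base = resolve_ollama_host(raw)
        print(base, "reachable" if ollama_is_reachable(base) else "unreachable")
```

Because the check runs outside the container, a host name that only resolves on the container's network (for example a Docker Compose service name) may appear unreachable here even though PromptPanel Pro can reach it.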

Management Interface

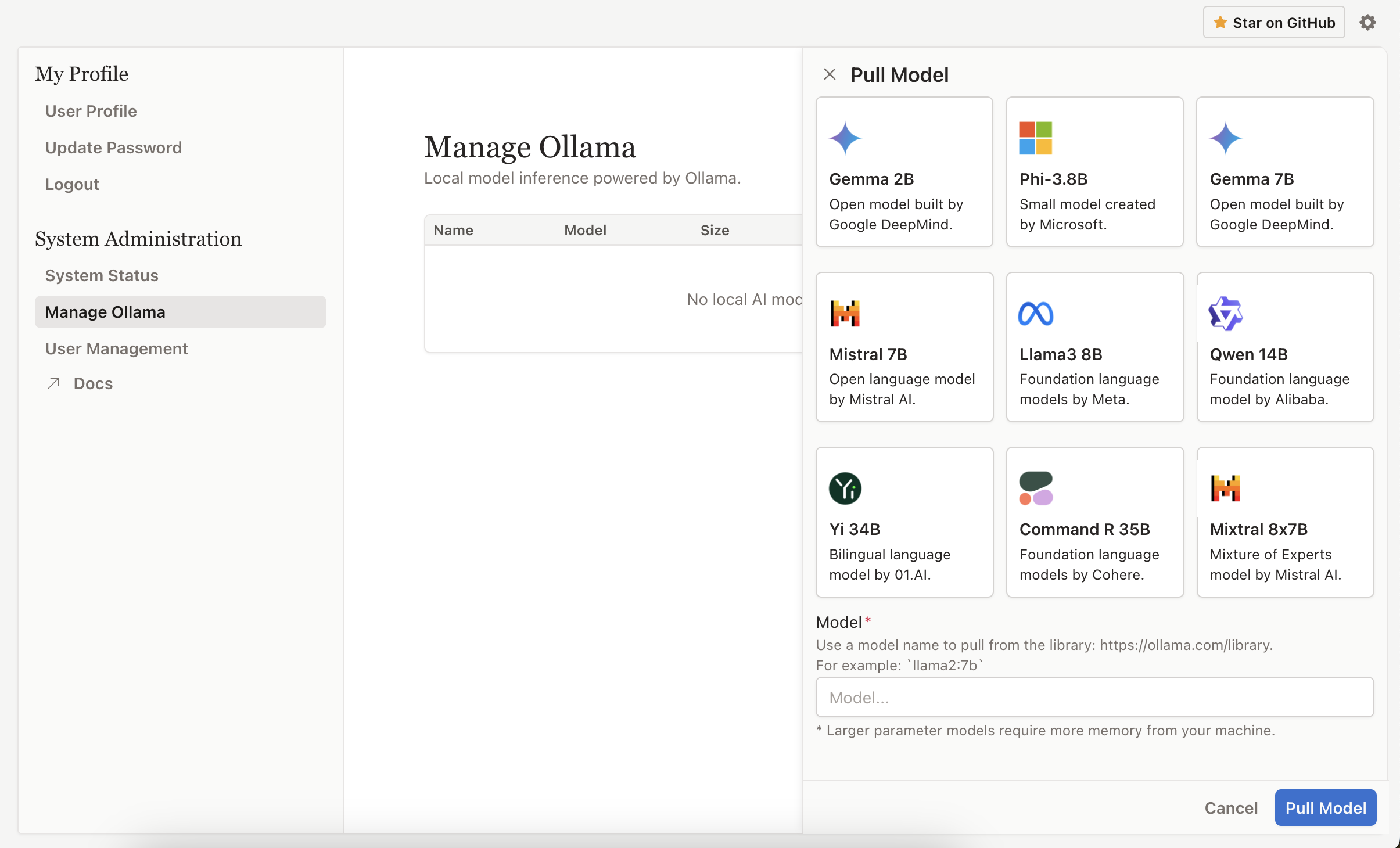

Once the environment variable is set, Admin users can access the Ollama management interface by navigating to Settings > Manage Ollama.

This page gives you a single place to oversee and interact with your Ollama models.